Explore how voice cloning technology and zero-shot learning fuel audio deepfakes

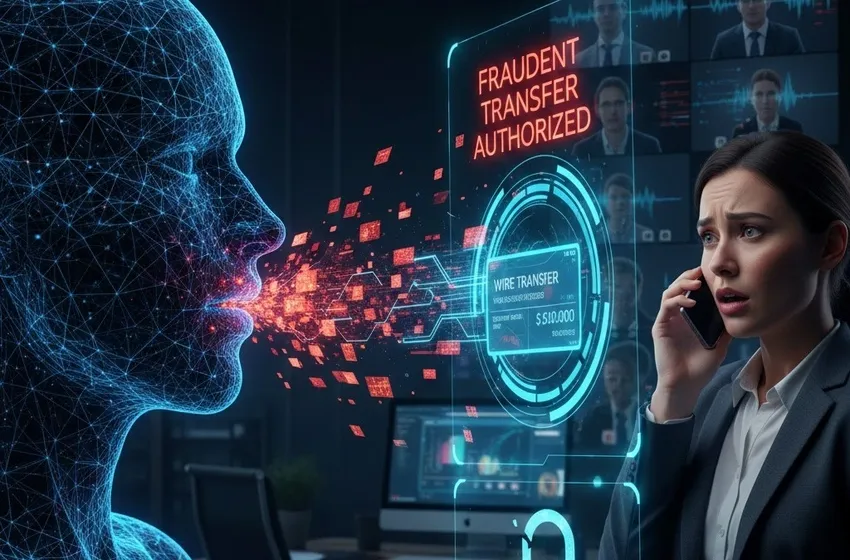

The Threat of Deepfake Voice Synthesis: A New Era of Cyber Deception

In an era where digital transformation defines every facet of our lives, the human voice—once considered a unique and unhackable biological identifier—is under siege. The rise of audio deepfakes has shifted from the realm of science fiction and Hollywood entertainment into a formidable cybersecurity threat. By leveraging sophisticated AI, malicious actors can now replicate a person’s vocal identity with chilling precision, leading to a surge in high-stakes phishing scams and corporate espionage.

As we navigate 2025, understanding the mechanics of voice cloning technology and the financial devastation it can cause is no longer optional for businesses or individuals; it is a critical necessity for digital survival.

The Engine of Deception: How Voice Cloning Works

At its core, modern voice cloning technology relies on advanced deep learning architectures, such as Generative Adversarial Networks (GANs) and neural vocoders. Unlike early text-to-speech systems that sounded robotic and monotone, today’s models capture the subtle "prosody" of human speech: the rhythm, stress, and intonation that make a voice uniquely yours.

Zero-Shot Learning: The 3-Second Threat

The most significant breakthrough in this field is zero-shot learning. In traditional machine learning, a model would require hours of high-quality audio recordings to "learn" a specific voice. However, zero-shot learning allows an AI model to replicate a target's voice after being exposed to a remarkably small sample—sometimes as little as three to five seconds of audio.

By analyzing this tiny "acoustic fingerprint," the AI can generalize the speaker's characteristics and apply them to any arbitrary text or real-time speech input. This means that a single TikTok video, a recorded webinar, or even a brief "Hello?" on a cold call can give an attacker enough data to create a permanent digital twin of your voice.
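To make the "acoustic fingerprint" idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any production cloning system works: real zero-shot models use a learned neural speaker encoder, whereas this toy simply averages log-magnitude spectra with NumPy. The function names (`synth_voice`, `toy_speaker_embedding`) and the synthetic "voices" are hypothetical stand-ins for real recordings.

```python
import numpy as np

def synth_voice(f0, formant_hz, seed, seconds=3.0, sr=16000):
    """Hypothetical stand-in for a 3-second recording: harmonics of a pitch
    f0, shaped by one resonance peak, plus a little background noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(seconds * sr)) / sr
    signal = 0.01 * rng.standard_normal(t.size)
    k = 1
    while k * f0 < sr / 2:
        # Harmonics near the resonance are louder than the rest
        amp = 0.01 + np.exp(-(((k * f0) - formant_hz) / 500.0) ** 2)
        phase = rng.uniform(0, 2 * np.pi)
        signal += amp * np.sin(2 * np.pi * k * f0 * t + phase)
        k += 1
    return signal

def toy_speaker_embedding(waveform, frame_len=400, hop=160):
    """Average log-magnitude spectrum over all frames: a crude,
    fixed-length fingerprint that ignores what is being said."""
    n_frames = 1 + (len(waveform) - frame_len) // hop
    frames = np.stack(
        [waveform[i * hop : i * hop + frame_len] for i in range(n_frames)]
    )
    spectrum = np.abs(np.fft.rfft(frames * np.hanning(frame_len), axis=1))
    embedding = np.log(spectrum + 1e-8).mean(axis=0)
    embedding = embedding - embedding.mean()
    return embedding / np.linalg.norm(embedding)

def cosine_similarity(a, b):
    # Both embeddings are unit length, so the dot product is the cosine
    return float(np.dot(a, b))

# Two short clips of "speaker A" and one clip of "speaker B"
clip_a1 = toy_speaker_embedding(synth_voice(120, 700, seed=1))
clip_a2 = toy_speaker_embedding(synth_voice(120, 700, seed=2))
clip_b = toy_speaker_embedding(synth_voice(210, 2200, seed=3))

same_speaker = cosine_similarity(clip_a1, clip_a2)
different_speaker = cosine_similarity(clip_a1, clip_b)
print(f"same speaker: {same_speaker:.3f}")
print(f"different speaker: {different_speaker:.3f}")
```

The point of the sketch is that a few seconds of audio already yield a compact vector that separates one speaker from another. A cloning model conditions its speech generator on a far richer, learned version of that vector.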

The Anatomy of a Cybersecurity Threat

The transition of synthesized audio into a weaponized cybersecurity threat has been rapid. Attackers are no longer just "spoofing" phone numbers; they are "spoofing" identities. This evolution has birthed a new generation of social engineering attacks that bypass traditional mental filters.

The Rise of Sophisticated Phishing Scams (Vishing)

While email phishing remains common, "vishing" (voice phishing) powered by AI is far more persuasive. Human psychology is hardwired to trust the voices of authority figures and loved ones. When a finance manager receives a call that sounds exactly like their CFO, the instinct to comply overrides the instinct to verify.

- CEO Fraud: Attackers clone the voice of a high-ranking executive to authorize "urgent" and "confidential" wire transfers.
- The "Grandparent" Scam: On an individual level, scammers target the elderly with cloned voices of grandchildren claiming to be in an emergency, demanding immediate payment via untraceable methods like cryptocurrency or gift cards.

Financial Impact: Beyond Small Change

The financial stakes are staggering. In early 2024, a multinational firm in Hong Kong lost approximately $25.6 million after an employee was tricked by a video conference in which every participant except the victim was a deepfake. While video played a role, the realistic, synthesized voices were the primary hook that validated the deception. Reported estimates put the average loss for businesses per successful deepfake incident above $500,000, making it one of the most lucrative vectors for organized cybercrime.

Verification Methods: Fighting AI with AI

As the threat landscape evolves, so too must our defenses. Relying on "the human ear" is no longer a viable strategy, as modern audio deepfakes can reportedly achieve a 95% match to the target voice, close enough that most listeners cannot tell the clone from the real speaker.

Technical Detection and Watermarking

To counter this, organizations are implementing specialized verification methods designed to spot the "ghosts in the machine."

- Liveness Detection: Advanced systems analyze audio for "micro-tremors" and harmonic structures that occur naturally in human vocal cords but are absent in synthetic recreations.
- Digital Watermarking: Some AI developers are now embedding inaudible high-frequency signals into generated audio. These act as a digital "tamper-evident" seal, allowing detection software to immediately flag the content as synthetic.
- Metadata and Artifact Analysis: Specialized algorithms scan for "spectral artifacts," tiny inconsistencies in the audio waveform that are generated during the synthesis process.
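The watermarking idea from the list above can be sketched in a few lines of Python. This is a toy under stated assumptions, not any vendor's actual scheme: it uses a classic spread-spectrum approach (a keyed pseudo-random carrier added across the whole signal) rather than a high-frequency tone, and it makes no attempt to keep the mark inaudible, which real systems do with perceptual shaping. The function names, the `strength` and `threshold` values, and the secret keys are all hypothetical.

```python
import numpy as np

def keyed_carrier(key, n):
    """Pseudo-random +1/-1 sequence that only the key holder can regenerate."""
    rng = np.random.default_rng(key)
    return rng.choice([-1.0, 1.0], size=n)

def embed_watermark(audio, key, strength=0.02):
    # Add a faint copy of the keyed carrier to every sample
    return audio + strength * keyed_carrier(key, len(audio))

def detect_watermark(audio, key, threshold=0.01):
    """Correlate the audio with the keyed carrier. Unmarked audio averages
    out near zero; marked audio scores close to the embedding strength."""
    score = float(np.mean(audio * keyed_carrier(key, len(audio))))
    return score, score > threshold

# Hypothetical "generated" audio: three seconds of a 220 Hz tone at 16 kHz
sr = 16000
t = np.arange(3 * sr) / sr
generated = 0.3 * np.sin(2 * np.pi * 220 * t)

secret_key = 2025  # assumed to be held by the model provider
marked = embed_watermark(generated, secret_key)

marked_score, marked_flag = detect_watermark(marked, secret_key)
clean_score, clean_flag = detect_watermark(generated, secret_key)
wrong_score, wrong_flag = detect_watermark(marked, secret_key + 1)
print("marked audio flagged:", marked_flag)
print("unmarked audio flagged:", clean_flag)
print("marked audio, wrong key, flagged:", wrong_flag)
```

Detection depends on knowing the key, which is why watermark checks are typically offered by the developer who generated the audio. A watermark also only flags content from cooperating tools, so an attacker running an unmarked model is unaffected, and the other detection methods remain necessary.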

The "Human Firewall" and Out-of-Band Verification

Despite technical advancements, the most effective defense remains a combination of policy and skepticism. Businesses are moving toward out-of-band verification, where any sensitive request made over the phone must be confirmed through a secondary, pre-approved channel (such as an internal encrypted chat), sometimes backed by a pre-shared verbal "codeword" that a cloned voice would not know.
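As a rough illustration of that policy, the sketch below holds a phone request until a one-time code, delivered over the second channel, is read back. It is a minimal example using only Python's standard library. The class name `OutOfBandVerifier`, the five-minute expiry, and the request ID format are assumptions made for illustration, and actual delivery of the code (for example, posting it to the internal chat) is left out.

```python
import hmac
import secrets
import time

class OutOfBandVerifier:
    """Holds a sensitive request until it is confirmed on a second channel."""

    def __init__(self, ttl_seconds=300):
        self.ttl_seconds = ttl_seconds
        self._pending = {}  # request_id -> (one-time code, expiry time)

    def open_request(self, request_id):
        # Six-digit code from a cryptographically secure generator
        code = f"{secrets.randbelow(10**6):06d}"
        expiry = time.monotonic() + self.ttl_seconds
        self._pending[request_id] = (code, expiry)
        # Send this via the pre-approved channel, never over the call itself
        return code

    def confirm(self, request_id, supplied_code):
        # pop() makes every code single use, even after a wrong guess
        entry = self._pending.pop(request_id, None)
        if entry is None:
            return False
        code, expiry = entry
        if time.monotonic() > expiry:
            return False
        # Constant-time comparison avoids leaking how many digits matched
        return hmac.compare_digest(code.encode(), supplied_code.encode())

verifier = OutOfBandVerifier()
code = verifier.open_request("wire-001")
first_try = verifier.confirm("wire-001", code)
replay = verifier.confirm("wire-001", code)
print("confirmed:", first_try)
print("replayed code accepted:", replay)
```

The design choice worth noting is that a wrong or replayed code cancels the pending request instead of allowing retries. A caller with a cloned voice cannot guess repeatedly, and the legitimate requester simply starts a new confirmation.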

Conclusion: Preparing for a Synthetic Future

The threat of audio deepfakes is not a temporary trend; it is the new baseline for digital risk. As voice cloning technology becomes more accessible, the barrier to entry for cybercriminals continues to drop. For individuals, the era of "trust but verify" has been replaced by "verify, then trust." For businesses, integrating robust verification methods and treating voice as a sensitive data point—rather than a secure credential—is the only way to mitigate this growing cybersecurity threat.