Explore Neuro-symbolic AI architectures, a hybrid AI approach combining the learning power of neural networks with the logic

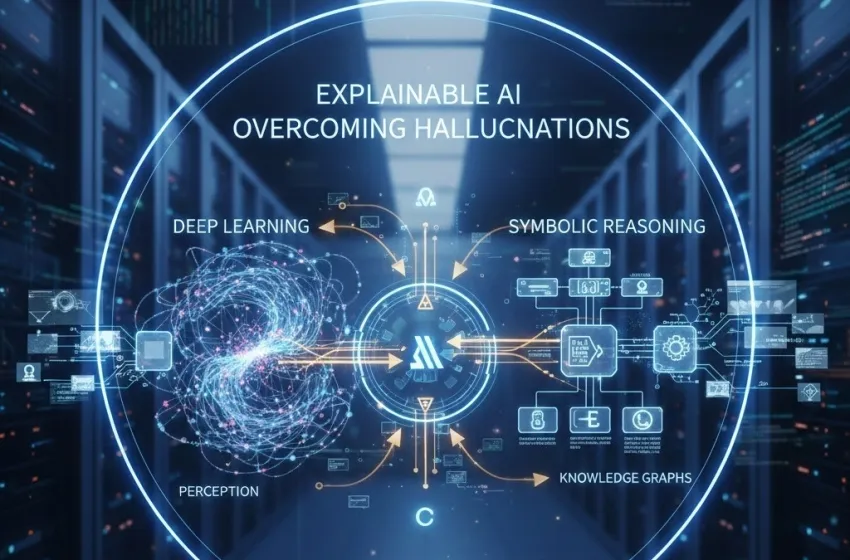

The modern era of Artificial Intelligence has been largely defined by the success of deep learning and neural networks. These models have achieved remarkable results in perception-based tasks, from image recognition and natural language processing to complex game-playing. Their success has also exposed a fundamental limitation: as "black boxes," they offer little transparency, explicit logical reasoning, or verifiability. Neuro-symbolic AI (NSAI) addresses this gap by combining the learning power of neural networks with the logic and reasoning of symbolic AI, with the aim of building more robust, verifiable, and explainable systems.

This emerging field of hybrid AI seeks to merge the statistical, pattern-matching prowess of neural networks with the explicit knowledge representation and logical inference of classical symbolic reasoning. The goal is to build intelligent systems that can not only "see" and "learn" from vast amounts of data but also "think," "reason," and "explain" their decisions in a human-understandable way.

The Core Synergy: Neural Networks Meet Symbolic AI

The integration of neural and symbolic methods is often compared to Daniel Kahneman’s dual-process theory of human cognition:

- System 1 (Neural): Fast, intuitive, and unconscious. This maps to the deep learning component, which excels at high-dimensional pattern recognition, feature extraction, and handling noisy, unstructured data (like raw images or text).
- System 2 (Symbolic): Slower, deliberate, logical, and explicit. This corresponds to the symbolic reasoning component, which uses formal logic, rules, and structured knowledge to perform inference, planning, and deductive thought.

The resulting synergy addresses the principal weaknesses of each approach in isolation. Purely neural systems struggle to generalize beyond their training data, offer little explainability, and are prone to confident but wrong or illogical outputs, often called hallucinations. Purely symbolic systems, on the other hand, are brittle, require manual encoding of vast amounts of expert knowledge, and struggle to process raw, unstructured, "perceptual" data.

By integrating the two, Neuro-symbolic AI models can leverage the best of both worlds:

- Perception and Feature Extraction (Neural): Recognizing a cat in an image.
- Reasoning and Inference (Symbolic): Applying the logical rule, "If an object is a Cat AND has a Tail, then it is a Feline."
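
The division of labor above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_perception` is a hypothetical stand-in for a trained image classifier, its confidence scores are made up, and the 0.5 threshold used to turn scores into symbols is an arbitrary choice.

```python
from typing import Dict, Set


def fake_perception(image_id: str) -> Dict[str, float]:
    """Hypothetical stand-in for a neural network.

    Maps an image to concept confidences; the numbers are invented.
    """
    outputs = {
        "photo_1": {"Cat": 0.94, "Tail": 0.88},
        "photo_2": {"Cat": 0.12, "Tail": 0.91},
    }
    return outputs[image_id]


def to_symbols(confidences: Dict[str, float], threshold: float = 0.5) -> Set[str]:
    """Turn soft neural scores into discrete symbols a rule can consume."""
    return {concept for concept, score in confidences.items() if score >= threshold}


def apply_rule(symbols: Set[str]) -> Set[str]:
    """Symbolic step: IF Cat AND Tail THEN Feline."""
    derived = set(symbols)
    if {"Cat", "Tail"} <= symbols:
        derived.add("Feline")
    return derived


for image_id in ("photo_1", "photo_2"):
    facts = apply_rule(to_symbols(fake_perception(image_id)))
    print(image_id, sorted(facts))
```

The neural side only ever produces scores; the rule fires on the thresholded symbols, so the reason a label was (or was not) derived can be read directly from the facts.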

Neuro-Symbolic AI Architectures: Design Principles

The field of Neuro-symbolic AI is characterized by a spectrum of integration methodologies, ranging from loosely coupled to deeply integrated systems. Key architectural designs focus on how the neural and symbolic components interact and exchange information.

Sequential and Loose Hybrid Architectures

In these models, the two components operate in sequence or are loosely connected.

- Neural → Symbolic: A neural network acts as a perception module, extracting high-level concepts or symbols from raw data, which are then passed to a symbolic engine for logical reasoning.
  - Example: A neural network identifies objects (e.g., "blue cube," "red sphere") and their relations in an image, and a symbolic reasoning engine (e.g., a rule-based system) then answers a logical question like, "Is the blue cube to the left of the red sphere?" (This is often seen in early visual question-answering systems.)
- Symbolic → Neural (or Symbolic[Neural]): The symbolic system guides or constrains the neural component.
  - Example: AlphaGo used Monte Carlo Tree Search (symbolic reasoning and planning) to explore the game tree, but its evaluation of board positions relied on a trained Convolutional Neural Network (the neural component).
- Neural ∣ Symbolic (Independent Modules): The neural component and the symbolic component can call each other as needed, often through an API or "plugin."
  - Example: A Large Language Model (neural) might encounter a mathematical query and use a symbolic calculator (e.g., Wolfram Alpha) to perform the computation, integrating the result back into its natural language response. This is crucial for improving factual accuracy and overcoming hallucinations in LLMs.
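
The calculator hand-off in the last example can be sketched as follows. Everything here is an assumption made for illustration: `mock_language_model` is a hypothetical stand-in for an LLM, the `CALC[...]` marker is an invented convention for requesting a tool call, and the small evaluator built on Python's `ast` module stands in for an external symbolic engine such as Wolfram Alpha.

```python
import ast
import operator
import re

# Arithmetic operators the symbolic evaluator is allowed to apply.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def evaluate(expression: str):
    """Compute arithmetic exactly by walking the parsed expression tree."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Anything else (names, calls, attributes) is rejected.
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval"))


def mock_language_model(prompt: str) -> str:
    """Hypothetical LLM that defers arithmetic to a tool instead of guessing."""
    match = re.match(r"What is (.+)\?", prompt)
    if match is None:
        return "I am not sure."
    return f"The answer is CALC[{match.group(1)}]."


def answer(prompt: str) -> str:
    """Fill every CALC[...] request in the draft with the evaluator's result."""
    draft = mock_language_model(prompt)
    return re.sub(r"CALC\[(.+?)\]", lambda m: str(evaluate(m.group(1))), draft)


print(answer("What is 12 * (7 + 5)?"))
```

The neural component decides when a computation is needed and how to phrase the reply; the number itself comes from a deterministic evaluator, so it cannot be hallucinated.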

Tightly Integrated and Deep Architectures

These systems blend the neural and symbolic components more deeply, often by making the symbolic operations differentiable or embedding symbolic structures directly into the neural network.

- Neural_{Symbolic}: The neural network is structurally derived from or directly encodes symbolic rules or knowledge.
  - Example: Logic Tensor Networks (LTN) encode logical formulas as constraints within a neural network, allowing the system to simultaneously learn from data and satisfy explicit logical rules. The logical rules are "compiled" into differentiable terms that influence the training process, ensuring that the learned model adheres to the domain's knowledge graphs or logical axioms.
- DeepProbLog: Combines deep learning with probabilistic symbolic reasoning. It uses neural networks to determine probabilities that are then used in a probabilistic logic programming framework. This allows the system to learn from data while performing logical and probabilistic inference, offering a robust approach to handling uncertainty.
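
To make the DeepProbLog idea concrete, here is a hand-computed sketch in plain Python. It does not use the DeepProbLog library or its API. It assumes two hypothetical digit classifiers whose softmax outputs are hard-coded, and it evaluates one logical query, "the two digits add up to S", by summing the probability of every pair of digits that satisfies it.

```python
from itertools import product
from typing import Dict, List


def digit_distribution(probabilities: Dict[int, float]) -> List[float]:
    """Build a length-10 probability vector, shaped like a digit classifier's softmax."""
    return [probabilities.get(digit, 0.0) for digit in range(10)]


def sum_distribution(p_first: List[float], p_second: List[float]) -> Dict[int, float]:
    """P(sum = s) = sum of P(first = a) * P(second = b) over all pairs with a + b = s.

    The two digits are treated as independent. The logic (integer addition) is
    fixed and exact; only the digit probabilities come from the neural side.
    """
    totals: Dict[int, float] = {}
    for a, b in product(range(10), repeat=2):
        totals[a + b] = totals.get(a + b, 0.0) + p_first[a] * p_second[b]
    return totals


# Hypothetical classifier outputs for two handwritten-digit images.
first_image = digit_distribution({3: 0.9, 8: 0.1})
second_image = digit_distribution({5: 0.8, 6: 0.2})

distribution = sum_distribution(first_image, second_image)
most_likely = max(distribution, key=distribution.get)
print(most_likely, round(distribution[most_likely], 2))
```

Because the query probability is built only from sums and products of the network outputs, it is differentiable with respect to them. That is what lets a system of this kind be trained from labels on the sum alone, without per-digit labels; the training loop itself is omitted here.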

Key Benefits: Robustness, Verifiability, and Explainability

The primary motivation for developing Neuro-symbolic AI is to solve the major deficiencies of pure deep learning, namely in the areas of explainable AI (XAI), verifiability, and robustness.

The Power of Explainable AI (XAI)

The most significant advantage of Neuro-symbolic AI is its intrinsic capacity for explainable AI (XAI). A pure neural network provides a prediction, but the rationale is opaque—a black box. A neuro-symbolic system, however, can trace its conclusion back through the explicit steps of the symbolic reasoning engine.

- Traceable Reasoning: If a neuro-symbolic medical system diagnoses a disease, the symbolic component can provide a "proof trace" or a chain of rules and facts from the knowledge graphs used to reach the decision. For instance: "The patient has Disease X because (Rule 1: Symptom A and Symptom B are present) AND (Fact from Medical KB: Symptom A is a definitive marker for X) AND (Neural Module Output: Image pattern confirmed presence of related biomarker Z)."
- Trust and Compliance: This transparency is essential for high-stakes domains like medicine, finance, and autonomous driving, where trust, accountability, and regulatory compliance (e.g., what is often described as the GDPR's "right to explanation") are non-negotiable.
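
A proof trace of this kind falls out naturally from a rule engine that records which rule produced each fact. The sketch below is a minimal forward-chaining example, not a medical system: the rule names, the facts, and the simplified two-rule chain are all hypothetical, and the "neural image module" is represented only as a provenance label on one input fact.

```python
from typing import Dict, List, NamedTuple, Tuple, Union


class Rule(NamedTuple):
    name: str
    premises: Tuple[str, ...]
    conclusion: str


# A fact's provenance is either a source label (input fact) or the rule that derived it.
Provenance = Union[str, Rule]


def forward_chain(base_facts: Dict[str, str], rules: List[Rule]) -> Dict[str, Provenance]:
    """Apply rules until no new fact appears, remembering how each fact was obtained."""
    known: Dict[str, Provenance] = dict(base_facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and all(p in known for p in rule.premises):
                known[rule.conclusion] = rule
                changed = True
    return known


def explain(goal: str, known: Dict[str, Provenance], depth: int = 0) -> List[str]:
    """Unfold the recorded provenance of `goal` into an indented proof trace."""
    indent = "  " * depth
    if goal not in known:
        return [f"{indent}{goal} (not derivable)"]
    source = known[goal]
    if isinstance(source, Rule):
        lines = [f"{indent}{goal} (by {source.name})"]
        for premise in source.premises:
            lines.extend(explain(premise, known, depth + 1))
        return lines
    return [f"{indent}{goal} (from {source})"]


base_facts = {
    "symptom_a": "patient record",
    "symptom_b": "patient record",
    "biomarker_z": "neural image module",  # would come from a trained model
}
rules = [
    Rule("Rule 1", ("symptom_a", "symptom_b"), "suspected_x"),
    Rule("Rule 2", ("suspected_x", "biomarker_z"), "disease_x"),
]

trace = explain("disease_x", forward_chain(base_facts, rules))
print("\n".join(trace))
```

Each line of the trace names either the rule that fired or the source of an input fact, which is the kind of record an auditor or clinician could inspect.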

Overcoming Hallucinations and Improving Verifiability

One of the most pressing issues with modern Large Language Models (LLMs) is the phenomenon of hallucinations—generating fluent, confident, but factually incorrect information. This stems from the model's reliance on statistical pattern matching rather than checking against verifiable facts.

- Factual Grounding: Neuro-symbolic AI directly combats hallucinations by enforcing factual constraints through the symbolic reasoning component. By connecting the neural language model to a structured knowledge graph or a formal logic base, the system can verify the truth value of a generated statement before output.
- Improved Robustness: The logical component acts as a safeguard, checking that the system's output is consistent with a set of pre-defined rules, domain knowledge, and common sense. This can make the overall system more robust and less brittle when it encounters adversarial inputs or data slightly outside its training distribution.
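
The verification step can be sketched as a lookup against a set of triples. This is a toy illustration under several assumptions: the knowledge graph is a three-entry Python set, the claims are assumed to have already been extracted from the model's draft as (subject, relation, object) triples (which is itself a hard problem), and `FUNCTIONAL_RELATIONS` is a hypothetical list of relations in which a subject can have only one object.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

# Tiny hand-written knowledge graph used only for this example.
KNOWLEDGE_GRAPH: Set[Triple] = {
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
    ("France", "member_of", "EU"),
}

# Relations where each subject has at most one object (an assumption of this sketch).
FUNCTIONAL_RELATIONS = {"capital_of"}


def check_claim(claim: Triple) -> str:
    """Classify one claim as 'supported', 'contradicted', or 'unknown'."""
    subject, relation, obj = claim
    if claim in KNOWLEDGE_GRAPH:
        return "supported"
    if relation in FUNCTIONAL_RELATIONS:
        for s, r, o in KNOWLEDGE_GRAPH:
            if s == subject and r == relation and o != obj:
                return "contradicted"
    return "unknown"


def filter_claims(claims: List[Triple]) -> List[Triple]:
    """Keep only the claims the knowledge graph supports."""
    return [claim for claim in claims if check_claim(claim) == "supported"]


draft_claims = [
    ("Paris", "capital_of", "France"),
    ("Paris", "capital_of", "Germany"),  # conflicts with the graph
    ("Madrid", "capital_of", "Spain"),   # true, but absent from this toy graph
]
for claim in draft_claims:
    print(claim, "->", check_claim(claim))
```

Note the third outcome: a claim the graph cannot confirm is reported as "unknown" rather than false, so the quality of the grounding depends directly on the coverage of the knowledge base.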

Enhanced Learning and Generalization

Neuro-symbolic AI facilitates faster learning with less data. By injecting high-level, human-expert knowledge into the system through knowledge graphs and logical rules, the neural component doesn't have to learn these fundamental concepts from scratch. This leads to:

- Zero-Shot/Few-Shot Learning: The system can generalize to new tasks or concepts based on the abstract, logical structure it already possesses, requiring only a few new examples or even none at all.
- Transfer Learning: The symbolic rules, being domain-agnostic or easily adaptable, can be transferred to new problems, significantly accelerating development and deployment in diverse fields.

Conclusion: The Path to Human-Level Intelligence

Neuro-symbolic AI is not merely an incremental improvement; it is an architectural imperative for achieving the next generation of intelligent systems. By embracing this hybrid AI approach, we move away from purely statistical correlation and towards a system that integrates true cognitive intelligence: combining the learning power of neural networks with the logic and reasoning of symbolic AI.

The successful implementation of these architectures, leveraging concepts like knowledge graphs and deep logical integration, promises systems that are not only high-performing but also trustworthy, verifiable, and capable of complex symbolic reasoning. This unified paradigm offers a promising path toward a long-standing goal of AI: creating systems with human-like comprehension. That goal depends on the ability to both learn patterns and reason logically, while maintaining the transparency required for explainable AI (XAI). The challenge of overcoming hallucinations and ensuring AI safety makes Neuro-symbolic AI a key direction for research and development in the years to come.