Master Green Computing, energy-efficient hardware, and sustainable software.

The digital age, characterized by exponential growth in computing power, cloud services, and artificial intelligence, has brought unprecedented advancements. However, this progress carries a significant and often overlooked environmental cost. The information technology (IT) sector's energy consumption, particularly in large data centers, contributes substantially to global greenhouse gas emissions. Addressing this challenge is not merely an option but a global imperative, and it is driving the movement toward Energy-Efficient Computing, a critical pillar of Green Tech. This comprehensive approach, often termed Green computing, applies environmentally sustainable practices across the entire lifecycle of IT resources: design, manufacturing, use, and disposal. The core mission is clear: to drastically reduce the carbon footprint of our digital world without compromising performance.

Energy-Efficient Hardware: The Foundation of Green Computing

The first line of defense in the quest for sustainable IT lies in the physical components themselves. Energy-efficient hardware forms the bedrock of Green computing, focusing on maximizing computational output per watt of power consumed. This involves a radical shift in design philosophy, moving away from systems built purely for raw speed toward those optimized for power efficiency.

Low-Power Processors and Accelerators

Processors (CPUs and GPUs) are arguably the most power-hungry components in any computing system. Historically, designers prioritized clock speed, leading to high thermal design power (TDP) and increased energy waste. The shift now is toward architectures designed from the ground up for efficiency:

- Custom ARM-based Architectures: Chips like Apple's M-series and the custom ARM processors offered by cloud providers (e.g., AWS Graviton) have demonstrated that high performance can be achieved within significantly lower power envelopes than traditional x86 counterparts. These designs use a simplified (RISC) instruction set, and many of them, Apple's M-series among them, integrate memory and graphics into the same package as the CPU, reducing power-hungry data transfers between chips.

- Specialized AI Accelerators: The immense computational demands of AI training and inference call for specialized hardware. Dedicated AI chips, such as Google's Tensor Processing Units (TPUs), and optimized GPUs perform the dense matrix arithmetic of neural networks at trillions of operations per second, delivering far higher performance-per-watt on machine learning workloads than general-purpose CPUs.

Storage and Peripherals

The green transition extends beyond the processor. Storage and peripheral devices also play a crucial role:

- Solid State Drives (SSDs): Replacing traditional Hard Disk Drives (HDDs) with SSDs offers significant power savings. SSDs contain no moving parts, consuming less energy and operating much faster, which allows systems to complete tasks quickly and return to a low-power idle state.

- High-Efficiency Power Supplies: Power Supply Units (PSUs) convert alternating current (AC) from the wall into the direct current (DC) needed by components, and any power lost in that conversion is released as heat. Certifications such as 80 PLUS Platinum or Titanium set minimum efficiency levels across a range of loads (around $90\%$ or higher, with Titanium also covering very light loads), reducing both waste heat and overall power draw.

- Dynamic Voltage and Frequency Scaling (DVFS): Modern hardware incorporates sophisticated power management features. DVFS dynamically adjusts the operating voltage and clock speed of the CPU/GPU based on the immediate workload. When the system is idle or under a light load, power is drastically reduced, enabling real-time optimization of energy consumption.
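DVFS is effective because the switching (dynamic) power of CMOS logic is commonly approximated as $P \approx \alpha C V^2 f$, where $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the supply voltage, and $f$ the clock frequency; lowering voltage together with frequency therefore cuts power faster than lowering frequency alone. The minimal Python sketch below applies that approximation to two hypothetical operating points, then converts a DC load into wall power for two assumed PSU efficiencies. All numbers (capacitance, voltages, frequencies, efficiencies) are illustrative assumptions, and static leakage power is ignored.

```python
def dynamic_power_watts(activity: float, capacitance_f: float,
                        voltage_v: float, freq_hz: float) -> float:
    """CMOS switching-power approximation: P = activity * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


def wall_power_watts(dc_load_w: float, psu_efficiency: float) -> float:
    """AC power drawn from the outlet to deliver dc_load_w at a PSU efficiency in (0, 1]."""
    if not 0.0 < psu_efficiency <= 1.0:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return dc_load_w / psu_efficiency


# Hypothetical processor: activity factor 0.5, effective switched capacitance 20 nF.
ACTIVITY, C_EFF = 0.5, 20e-9

full_speed = dynamic_power_watts(ACTIVITY, C_EFF, voltage_v=1.2, freq_hz=3.0e9)
low_power = dynamic_power_watts(ACTIVITY, C_EFF, voltage_v=0.9, freq_hz=1.5e9)
print(f"full speed: {full_speed:.1f} W, DVFS low state: {low_power:.1f} W "
      f"({low_power / full_speed:.0%} of full speed)")

# The same 300 W DC load behind a less efficient and a more efficient PSU (assumed values).
for efficiency in (0.80, 0.94):
    wall = wall_power_watts(300.0, efficiency)
    print(f"PSU at {efficiency:.0%}: {wall:.0f} W from the wall, "
          f"{wall - 300.0:.0f} W lost as heat")
```

In this example, halving the frequency alone would halve dynamic power; dropping the voltage from 1.2 V to 0.9 V at the same time brings it to roughly 28% of the full-speed figure.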

Developing Energy-Efficient Algorithms and Sustainable Software

While hardware provides the foundation, true Green computing is realized through sustainable software and efficient operational practices. Even the most efficient hardware cannot compensate for software that is wasteful and poorly optimized. Sustainable software means designing algorithms and programs that reduce energy consumption by minimizing unnecessary computation and resource usage.

Algorithmic Efficiency

The efficiency of an algorithm is paramount. A computationally expensive algorithm running on the most efficient hardware can still consume more energy than a highly optimized algorithm on older hardware.

- Complexity Reduction: Developers are now prioritizing algorithms with lower computational complexity. For instance, an $O(n \log n)$ sorting algorithm is drastically more efficient than an $O(n^2)$ algorithm for large datasets, leading to fewer processing cycles and lower power consumption.

- Energy-Aware Programming: This involves writing code that minimizes memory access (a major power consumer) and avoids redundant calculations. Compilers are also becoming "energy-aware," optimizing machine code not just for speed, but for lower power usage.

- Model Pruning and Quantization in AI: Optimizing large machine learning models is a key strategy for reducing the energy footprint of AI. Model pruning removes redundant or less important connections (weights) from a neural network, while quantization reduces the numerical precision used in calculations (e.g., from 32-bit floating point to 8-bit integers). Both techniques can dramatically shrink model size and reduce the compute and energy needed to run models, particularly for inference, often with minimal loss of accuracy.
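To make the complexity point concrete, the sketch below counts element comparisons (a rough proxy for processor work, not a direct energy measurement) for an $O(n^2)$ insertion sort and an $O(n \log n)$ merge sort on the same reverse-ordered input. The function names are illustrative; in practice you would simply call Python's built-in `sorted`, which is also $O(n \log n)$.

```python
import math
from typing import List, Tuple


def insertion_sort(items: List[int]) -> Tuple[List[int], int]:
    """O(n^2) insertion sort; returns (sorted list, number of comparisons)."""
    a = list(items)
    comparisons = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if a[j] <= key:
                break
            a[j + 1] = a[j]  # shift the larger element one slot right
            j -= 1
        a[j + 1] = key
    return a, comparisons


def merge_sort(items: List[int]) -> Tuple[List[int], int]:
    """O(n log n) top-down merge sort; returns (sorted list, number of comparisons)."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, comps_left = merge_sort(items[:mid])
    right, comps_right = merge_sort(items[mid:])
    merged: List[int] = []
    comparisons = comps_left + comps_right
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


n = 1000
data = list(range(n, 0, -1))  # reverse-ordered input: worst case for insertion sort
ins_sorted, ins_comps = insertion_sort(data)
mrg_sorted, mrg_comps = merge_sort(data)
print(f"n={n}: insertion sort {ins_comps:,} comparisons, merge sort {mrg_comps:,}")
```

For 1,000 reverse-ordered items the insertion sort performs $n(n-1)/2 = 499{,}500$ comparisons, while merge sort stays below $n \lceil \log_2 n \rceil = 10{,}000$; the gap widens as $n$ grows.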
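Pruning and quantization can be sketched with NumPy. The example below applies unstructured magnitude pruning (zeroing the smallest-magnitude weights) and symmetric per-tensor int8 quantization to a randomly generated weight matrix. The helper names and the 50% sparsity are assumptions for illustration, not any particular framework's API. Note that zeroed weights only save energy when sparse storage formats or hardware that skips zeros are used, whereas int8 storage directly cuts memory size and traffic by 4x relative to float32.

```python
from typing import Tuple

import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out (approximately) the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights)
    # k-th smallest magnitude; every weight at or below it is pruned
    threshold = np.partition(magnitudes.ravel(), k - 1)[k - 1]
    return np.where(magnitudes <= threshold, 0.0, weights).astype(weights.dtype)


def quantize_int8(weights: np.ndarray) -> Tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto integers [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float32 weights from int8 values and the scale factor."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(seed=0)
w = rng.normal(0.0, 0.05, size=(256, 256)).astype(np.float32)  # hypothetical layer weights

pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
max_error = float(np.max(np.abs(dequantize(q, scale) - pruned)))

print(f"float32: {w.nbytes} bytes, int8: {q.nbytes} bytes")
print(f"zeroed weights: {np.mean(pruned == 0):.1%}, max quantization error: {max_error:.5f}")
```

The largest round-trip error is bounded by about half of `scale`, which is why 8-bit weights often lose little accuracy when the weight distribution has no extreme outliers.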

Resource and Deployment Optimization

How software is deployed, and how it manages resources, matters just as much as the code itself.

- Virtualization and Containerization: These techniques improve server utilization by running multiple virtual machines (virtualization) or isolated applications (containerization) simultaneously on a single physical server. Consolidating workloads this way reduces the number of physical machines required, cutting power consumption, cooling needs, and floor space, and thereby the overall carbon footprint of the IT infrastructure.

- Serverless Computing and Function-as-a-Service (FaaS): In serverless architectures, compute resources are allocated only while a function is actively running. An idle function holds no dedicated server, so the provider can pack many customers' workloads onto shared machines instead of keeping separate, mostly idle servers powered on. This makes serverless a powerful model for raising energy efficiency in cloud environments.

- Intelligent Load Scheduling: Systems can schedule flexible, intensive workloads for times when electricity is cheaper or the grid's carbon intensity is lower (for example, when wind or solar output is high), or shift them to locations powered largely by renewable energy. This strategy, often called "carbon-aware scheduling," directly contributes to carbon footprint reduction.
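Workload consolidation is often modelled as a bin-packing problem: place each virtual machine or container, described by its resource demand, onto as few physical servers as possible. The sketch below uses the classic first-fit decreasing heuristic with a single normalized CPU dimension (1.0 = one full server). Real placement systems also weigh memory, I/O, and headroom for load spikes, and the workload sizes shown are hypothetical.

```python
from typing import List


def first_fit_decreasing(loads: List[float], capacity: float = 1.0) -> List[List[float]]:
    """Pack workload loads onto servers using first-fit decreasing; returns per-server loads."""
    servers: List[List[float]] = []
    free: List[float] = []  # remaining capacity of each server, same order as `servers`
    for load in sorted(loads, reverse=True):  # place the largest workloads first
        if load > capacity:
            raise ValueError(f"workload {load} exceeds server capacity {capacity}")
        for i, remaining in enumerate(free):
            if load <= remaining + 1e-9:  # small tolerance for floating-point sums
                servers[i].append(load)
                free[i] -= load
                break
        else:  # no existing server has room: power on another one
            servers.append([load])
            free.append(capacity - load)
    return servers


# Hypothetical average CPU demand of ten workloads, each currently on its own machine.
vm_loads = [0.2, 0.1, 0.3, 0.15, 0.25, 0.1, 0.4, 0.05, 0.3, 0.15]
hosts = first_fit_decreasing(vm_loads)
print(f"{len(vm_loads)} workloads consolidated onto {len(hosts)} servers")
for i, host in enumerate(hosts):
    print(f"server {i}: {host} (utilization {sum(host):.0%})")
```

If each of these ten workloads ran on its own machine, eight of those machines could be powered down or repurposed after consolidation.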
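Carbon-aware scheduling can be reduced to a simple search once a forecast of grid carbon intensity is available: find the start time at which a delayable job of known duration sees the lowest average intensity. The sketch below assumes an hourly forecast in grams of CO2 per kWh (the values are hypothetical; in practice they would come from a grid operator or a carbon-intensity data service) and a job with constant power draw. It uses a sliding window so each candidate start is evaluated in constant time.

```python
from typing import Sequence, Tuple


def best_start_hour(forecast_g_per_kwh: Sequence[float], job_hours: int) -> Tuple[int, float]:
    """Return (start_index, mean_intensity) of the contiguous window with the lowest
    average forecast carbon intensity, assuming the job draws constant power."""
    if not 1 <= job_hours <= len(forecast_g_per_kwh):
        raise ValueError("job must fit inside the forecast horizon")
    window = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: add the newest hour, drop the oldest.
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / job_hours


# Hypothetical hourly forecast of grid carbon intensity, in gCO2 per kWh.
forecast = [420, 410, 390, 350, 300, 220, 180, 170, 190, 260, 340, 400]
job_energy_kwh = 6.0  # hypothetical 3-hour batch job drawing 2 kW

start, mean_intensity = best_start_hour(forecast, job_hours=3)
now_intensity = sum(forecast[:3]) / 3
print(f"run now: {job_energy_kwh * now_intensity / 1000:.2f} kg CO2")
print(f"run at hour {start}: {job_energy_kwh * mean_intensity / 1000:.2f} kg CO2")
```

The same job consumes the same energy either way; only its timing changes, which is what makes this kind of scheduling attractive for batch work that has no hard deadline.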

Data Center Optimization and Power Usage Effectiveness (PUE)

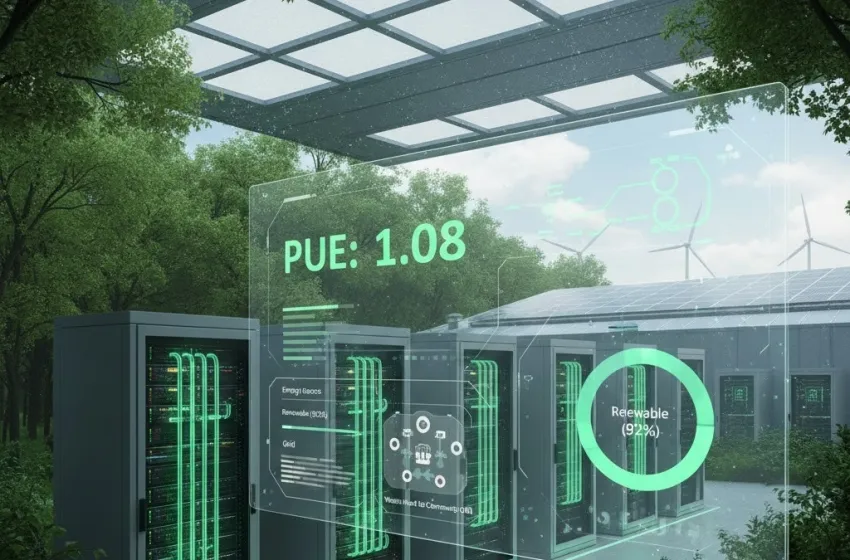

Data centers are the industrial heart of the digital world, and their enormous energy appetite (the largest facilities can draw as much power as a small city) makes them a primary target for Green Tech innovation. Data center optimization centers on a key metric: Power Usage Effectiveness (PUE).

Understanding and Improving PUE

Power Usage Effectiveness (PUE) is the globally accepted standard for measuring data center energy efficiency. It is calculated as the ratio of the Total Facility Energy (energy entering the building, including IT equipment, cooling, lighting, etc.) to the IT Equipment Energy (energy used solely by servers, storage, and networking).

$$PUE = \frac{\text{Total Facility Energy}}{\text{IT Equipment Energy}}$$

An ideal PUE is 1.0, meaning all energy goes to the IT equipment with no overhead. A PUE of 2.0 means that for every watt consumed by computing, another watt is consumed by overhead (primarily cooling and power conversion). The global average PUE is around 1.55, whereas green data centers aim to operate as close to 1.0 as possible, typically targeting values below 1.2.
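The PUE formula translates directly into code. The sketch below computes PUE from annual energy figures and estimates how much facility energy the same IT load would need at a lower target PUE. The 1,000,000 kWh IT load and both PUE values are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT equipment energy")
    return total_facility_kwh / it_equipment_kwh


def facility_energy_at(it_equipment_kwh: float, target_pue: float) -> float:
    """Total facility energy needed to run the same IT load at a given PUE."""
    return it_equipment_kwh * target_pue


it_kwh = 1_000_000.0     # hypothetical annual IT equipment energy
total_kwh = 1_550_000.0  # hypothetical annual total facility energy

current = pue(total_kwh, it_kwh)
overhead = total_kwh - it_kwh  # cooling, power conversion, lighting, etc.
savings = total_kwh - facility_energy_at(it_kwh, 1.2)
print(f"PUE {current:.2f}, overhead {overhead:,.0f} kWh, "
      f"savings at PUE 1.2: {savings:,.0f} kWh per year")
```

Because the IT load is held constant, every 0.1 reduction in PUE here saves 100,000 kWh of overhead per year.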

Advanced Cooling Technologies

The single largest consumer of non-IT energy in a data center is the cooling system, often accounting for $30\%$ to $50\%$ of total facility power. Innovative cooling methods are essential for lowering PUE:

- Free-Air Cooling: This technique uses filtered outside air to cool the facility, dramatically reducing the need for mechanical chillers. This is particularly effective in cooler climates, where it can be used for most of the year.

- Hot/Cold Aisle Containment: Physical barriers (such as curtains or panels) separate the hot exhaust air from the cold intake air, preventing the two from mixing so that cooled air reaches the equipment intakes instead of being wasted. This allows the cooling system to run with less airflow and at higher set points, significantly improving its efficiency.

- Liquid Cooling: As server density and heat output increase, especially with the high-power GPUs used in AI, liquid cooling is often the most efficient solution. Liquids carry heat far more effectively than air, enabling higher server density and PUE values close to 1.0.

  - Direct-to-Chip Liquid Cooling: A coolant (such as water or a dielectric fluid) is pumped directly to a cold plate attached to the CPU/GPU, capturing heat at the source.

  - Immersion Cooling: Servers are fully submerged in a thermally conductive but electrically non-conductive (dielectric) liquid.

Location, Design, and Waste Heat Utilization

Strategic design and location are key elements of Green computing:

- Location Optimization: Placing data centers in naturally cooler climates reduces the energy required for cooling. Additionally, locating them near abundant renewable energy sources (like hydropower in the Nordics or geothermal in Iceland) dramatically reduces their carbon footprint.

- Utilizing Renewable Energy: Tech giants are increasingly committing to powering their data centers entirely with renewable energy (solar, wind, and hydro), often procured through Power Purchase Agreements (PPAs) or generated on-site.

- Waste Heat Reuse: The heat exhausted from servers is a vast, underutilized energy source. Green Tech solutions capture this waste heat and repurpose it, for instance to heat office buildings, greenhouses, or nearby residential communities. The Energy Reuse Effectiveness (ERE) metric reflects this benefit: unlike PUE, it credits energy that is reused outside the data center, moving beyond simply reducing energy consumption to recycling it.
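The commonly used definition of ERE subtracts reused energy from the facility total before dividing by IT energy: $ERE = (\text{Total Facility Energy} - \text{Reused Energy}) / \text{IT Equipment Energy}$. Unlike PUE, which can never fall below 1.0, ERE can drop below 1.0 when enough waste heat is put to use. The sketch below compares the two metrics for a hypothetical facility; all energy figures are assumptions.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def ere(total_facility_kwh: float, it_equipment_kwh: float, reused_kwh: float) -> float:
    """Energy Reuse Effectiveness = (total facility energy - reused energy) / IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if not 0 <= reused_kwh <= total_facility_kwh:
        raise ValueError("reused energy must be between 0 and the facility total")
    return (total_facility_kwh - reused_kwh) / it_equipment_kwh


total_kwh, it_kwh = 1_200_000.0, 1_000_000.0  # hypothetical annual figures
for reused_kwh in (0.0, 300_000.0):           # no reuse vs. heat exported to nearby buildings
    print(f"reused {reused_kwh:>9,.0f} kWh -> PUE {pue(total_kwh, it_kwh):.2f}, "
          f"ERE {ere(total_kwh, it_kwh, reused_kwh):.2f}")
```

PUE is unchanged by heat reuse in this example, while ERE falls from 1.2 to 0.9, which is exactly the benefit the metric is meant to expose.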

The Future of Green Computing: AI, Edge, and Circularity

The evolution of Green computing continues with emerging technologies and paradigm shifts that promise even deeper reductions in carbon footprint.

The Paradox of AI and Sustainability

AI is itself an energy sink: the carbon footprint of AI training, and of the data centers that run it, is growing rapidly with the massive scale of large language models (LLMs). Yet AI is also one of the most powerful tools for sustainability.

- AI for Energy Management: AI-driven management systems can monitor thousands of data points in real time (temperature, humidity, workload) to predict cooling needs and dynamically adjust fan speeds, chiller operations, and server power states. Google's DeepMind famously used AI to cut the energy used for cooling Google's data centers by up to $40\%$.

- Algorithmic Innovation: Researchers are actively exploring more energy-efficient approaches, such as neuromorphic computing, which mimics the brain's network of spiking neurons in pursuit of its energy efficiency, and transfer learning, which adapts an existing pretrained model to a new task instead of training from scratch.

Edge Computing and Decentralization

Edge computing moves processing closer to the data source (e.g., IoT devices, smartphones) instead of routing everything to a centralized cloud data center. By processing data locally and sending only results upstream, edge computing can reduce the volume of data moved across networks, and with it both network energy consumption and the load on centralized facilities, further contributing to Green computing goals.
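The bandwidth side of this argument can be illustrated by comparing what an edge device would send upstream with and without local aggregation. The sketch below is hypothetical: a simulated sensor reports once per second for ten minutes, and the edge node forwards either every raw reading or a four-field summary, both serialized as JSON. Bytes sent is only a rough proxy for network energy, which also depends on the link technology and how long radios stay active.

```python
import json
import statistics
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Reduce a window of raw sensor readings to a compact summary at the edge."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 3),
    }


def payload_size(obj: object) -> int:
    """Size in bytes of the JSON message that would be sent upstream."""
    return len(json.dumps(obj).encode("utf-8"))


# Hypothetical temperature sensor: one reading per second for ten minutes.
readings = [21.0 + 0.01 * (i % 50) for i in range(600)]

raw_bytes = payload_size(readings)
summary_bytes = payload_size(summarize(readings))
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes "
      f"({raw_bytes / summary_bytes:.0f}x less data sent)")
```

Whether a summary is sufficient depends on the application; anomaly detection, for example, might forward raw data only when a reading crosses a threshold.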

Lifecycle Management and E-Waste Reduction

Green computing extends to the end of a product's life. The principle of a circular economy is essential for true sustainability:

- Extended Product Lifespan: Promoting the repair, upgrade, and reuse of devices slows the cycle of consumption and reduces the energy and raw materials spent manufacturing new hardware.

- Responsible Disposal: For hardware that cannot be reused, proper recycling of electronic waste (e-waste) is critical to prevent hazardous materials from entering landfills and to reclaim valuable rare earth metals and components.
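One way to see why longer lifespans matter is to spread a device's embodied (manufacturing) emissions over the years it is used. The sketch below uses simple straight-line amortization with hypothetical figures (300 kg CO2e embodied, 20 kg CO2e per year from electricity). It ignores the possibility that a replacement device would use less energy, which is the trade-off a real lifecycle assessment would weigh.

```python
def annual_footprint_kg(embodied_kg: float, lifespan_years: float,
                        operational_kg_per_year: float) -> float:
    """Yearly CO2e: manufacturing emissions spread evenly over the lifespan, plus use-phase emissions."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    return embodied_kg / lifespan_years + operational_kg_per_year


EMBODIED_KG = 300.0         # hypothetical manufacturing footprint of one laptop
OPERATIONAL_KG_YEAR = 20.0  # hypothetical yearly footprint from electricity use

for years in (3, 6):
    footprint = annual_footprint_kg(EMBODIED_KG, years, OPERATIONAL_KG_YEAR)
    print(f"{years}-year lifespan: {footprint:.0f} kg CO2e per year")
```

Doubling the lifespan from three to six years cuts the annual footprint in this example from 120 to 70 kg CO2e, because the embodied share per year is halved.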