Explore 50 essential Computational Social Science tools for large-scale data analysis, social simulation, agent-based modeling, and policy prediction, ensuring ethical data use.

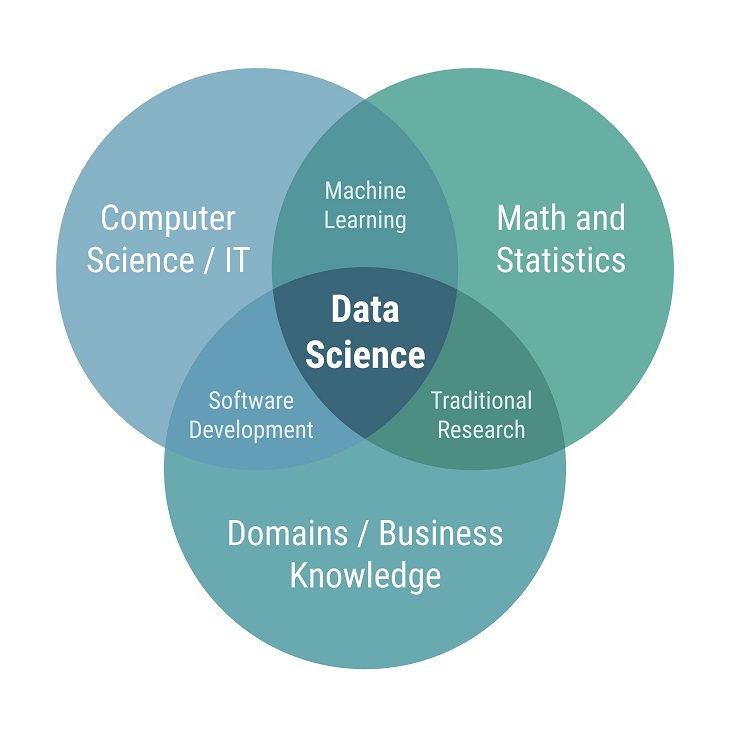

Computational social science (CSS) is a rapidly evolving interdisciplinary field at the intersection of social science, computer science, and data science. It is transforming our capacity to observe, model, and understand complex human behavior and societal structures. Rather than relying only on traditional, small-scale observational studies, CSS uses advanced computing, AI, and large datasets to model and simulate social behavior at scale. This computational lens lets researchers tackle previously intractable problems, from predicting the spread of information online to modeling the dynamics of political polarization and urban migration. What makes this possible is the toolkit available to practitioners, from specialized simulation platforms to powerful machine learning libraries.

The Core Pillars of Computational Social Science

Computational social science relies on three primary methodological pillars, each supported by a robust toolkit of computational techniques:

1. Large-Scale Data Analysis and Empirical Research

The foundation of CSS is large-scale data analysis, the kind of data often labeled "Big Data." It involves collecting, cleaning, and analyzing the massive digital footprints left by human activity, such as social media posts, web searches, government records, and mobile phone data.

Key Toolsets:

- Programming Languages and Environments: Python (with libraries such as pandas, NumPy, scikit-learn, and NetworkX) and R (with packages such as the tidyverse, which includes ggplot2) are the foundational languages. Between them, they cover everything from data manipulation to sophisticated machine learning.

- Data Acquisition and Management: Essential tools include web scrapers (e.g., Beautiful Soup, Scrapy), platform APIs (for platforms such as Twitter/X or Reddit), and storage systems for large datasets, from SQL databases to NoSQL systems such as MongoDB. Frameworks such as Apache Hadoop and Apache Spark handle distributed processing of massive, unstructured datasets.

- Text and Content Analysis: Natural Language Processing (NLP) techniques are crucial for extracting meaning and emotion from text-based digital data. Dictionary-based tools such as Linguistic Inquiry and Word Count (LIWC) count words in psychologically meaningful categories, while pre-trained language models (such as BERT or GPT) support tasks like sentiment analysis, topic modeling, and named entity recognition.
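To illustrate the dictionary-based approach in general terms (LIWC's own dictionaries are licensed and far larger), the minimal sketch below tokenizes a text, counts matches against word categories, and reports each category as a percentage of all tokens. The two-category `LEXICON` and the `score_text` and `tokenize` functions are hypothetical, made up for illustration.

```python
import re
from collections import Counter

# Hypothetical two-category lexicon; real LIWC dictionaries are much larger and licensed.
LEXICON = {
    "posemo": {"happy", "great", "love", "hope"},
    "negemo": {"sad", "angry", "hate", "fear"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and extract word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def score_text(text: str, lexicon: dict[str, set[str]] = LEXICON) -> dict[str, float]:
    """Return, for each category, the percentage of tokens found in its word list."""
    tokens = tokenize(text)
    if not tokens:
        return {category: 0.0 for category in lexicon}
    counts = Counter()
    for token in tokens:
        for category, words in lexicon.items():
            if token in words:
                counts[category] += 1
    return {category: 100.0 * counts[category] / len(tokens) for category in lexicon}


if __name__ == "__main__":
    print(score_text("I love this city, but I fear the rent will rise."))
```

For the sample sentence, one of its 11 tokens falls in each category, so both scores come out at roughly 9.1%.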

By applying these tools, researchers can identify patterns, correlations, and emerging trends in human behavior that would be invisible in smaller, traditional surveys. This empirical arm of CSS provides the data-driven evidence necessary to refine and validate social theories.
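As a minimal sketch of this kind of pattern-finding, the pandas snippet below rolls up a tiny, invented table of timestamped posts into daily counts per topic, the sort of aggregate a researcher might then plot or compare against offline events. The column names, topics, and rows are all made up.

```python
import pandas as pd

# Invented digital trace data: one row per post.
posts = pd.DataFrame(
    {
        "timestamp": pd.to_datetime(
            [
                "2024-03-01 09:15", "2024-03-01 17:40", "2024-03-02 08:05",
                "2024-03-02 12:30", "2024-03-02 21:10", "2024-03-03 10:00",
            ]
        ),
        "topic": ["housing", "transit", "housing", "housing", "transit", "housing"],
    }
)

# Count posts per calendar day and topic, then pivot topics into columns.
daily = (
    posts.assign(day=posts["timestamp"].dt.date)
    .groupby(["day", "topic"])
    .size()
    .unstack(fill_value=0)
)

# Day-over-day change in volume, a crude signal of emerging spikes in attention.
change = daily.diff()

print(daily)
print(change)
```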

2. Social Simulation and Agent-Based Modeling

While large-scale data analysis focuses on what has happened, social simulation and agent-based modeling (ABM) focus on understanding why it happened and what might happen next.

Key Toolsets:

- Agent-Based Modeling (ABM) Platforms: Agent-based modeling simulates the actions and interactions of autonomous individuals (agents) to show how macroscopic phenomena emerge from microscopic behaviors. Widely used platforms include:

  - NetLogo: A free, open-source, user-friendly platform designed specifically for agent-based modeling and social simulation. It excels at visualizing how local rules among agents lead to complex global patterns.

  - Mesa (Python): A framework for building ABMs in Python, accessible to anyone already familiar with the language's data science ecosystem.

  - Repast: A family of toolkits (including Repast Simphony for Java and Repast4Py for Python) for constructing large-scale ABMs, often used for sophisticated social simulation projects.

- System Dynamics: While different from ABM, System Dynamics (tools like Vensim or Stella) is another form of social simulation that models complex systems using stocks, flows, and feedback loops to understand system-level variables over time.
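To make the stocks-and-flows idea concrete, here is a minimal sketch of the Bass diffusion model written as one stock (adopters, N) fed by one flow (the adoption rate), integrated with simple Euler steps, a common integration method in system dynamics tools. It is plain Python rather than a Vensim or Stella model, and the market size and the innovation (p) and imitation (q) coefficients are illustrative values, not estimates from any dataset.

```python
def bass_diffusion(
    market_size: float = 10_000.0,  # M: total potential adopters
    innovation: float = 0.03,       # p: adoption driven by external influence
    imitation: float = 0.38,        # q: adoption driven by word of mouth
    dt: float = 0.25,               # time step in years
    years: float = 20.0,
) -> list[tuple[float, float]]:
    """Euler-integrate dN/dt = (p + q * N / M) * (M - N); return (time, adopters) pairs."""
    adopters = 0.0  # the stock N
    t = 0.0
    history = [(t, adopters)]
    for _ in range(int(round(years / dt))):
        potential = market_size - adopters
        # The flow: new adopters per unit time, from both adoption channels.
        adoption_rate = (innovation + imitation * adopters / market_size) * potential
        adopters += adoption_rate * dt
        t += dt
        history.append((t, adopters))
    return history


if __name__ == "__main__":
    for t, n in bass_diffusion()[::8]:
        print(f"year {t:5.1f}: {n:8.0f} adopters")
```

The feedback loop is visible in the flow equation: more adopters raise the imitation term, which accelerates adoption until the pool of potential adopters runs out, giving the familiar S-shaped curve.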

These simulations act as "artificial worlds" for virtual experiments, allowing researchers to test theories, explore "what-if" scenarios, and anticipate the outcomes of policies without the ethical or practical constraints of real-world intervention. Agent-based modeling is particularly valuable for exploring how phenomena such as the spread of disease, market crashes, or the adoption of new technologies might respond to policy.
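A minimal agent-based sketch of technology adoption, in the spirit of Granovetter-style threshold models: each agent sits on a ring network and adopts once the share of adopting neighbors reaches its own private threshold. Everything here (the ring network, the threshold range, the number of seed adopters, the function name `simulate_adoption`) is an illustrative assumption, written in dependency-free Python rather than NetLogo, Mesa, or Repast.

```python
import random


def simulate_adoption(
    n_agents: int = 200,
    k: int = 2,  # neighbors on each side of the ring
    threshold_range: tuple[float, float] = (0.1, 0.5),
    n_seeds: int = 5,
    max_steps: int = 100,
    seed: int = 42,
) -> list[int]:
    """Return the number of adopters after each synchronous update step."""
    rng = random.Random(seed)
    # Micro level: each agent gets its own adoption threshold.
    thresholds = [rng.uniform(*threshold_range) for _ in range(n_agents)]
    adopted = [False] * n_agents
    for i in rng.sample(range(n_agents), n_seeds):
        adopted[i] = True  # initial adopters, e.g. reached by a subsidy program

    def neighbors(i: int) -> list[int]:
        return [(i + d) % n_agents for d in range(-k, k + 1) if d != 0]

    history = [sum(adopted)]
    for _ in range(max_steps):
        new_state = adopted[:]
        for i in range(n_agents):
            if not adopted[i]:
                share = sum(adopted[j] for j in neighbors(i)) / (2 * k)
                if share >= thresholds[i]:
                    new_state[i] = True
        if new_state == adopted:
            break  # no agent changed state: the cascade has stopped
        adopted = new_state
        history.append(sum(adopted))
    return history


if __name__ == "__main__":
    # A "what-if" comparison: how does the number of seed adopters change the outcome?
    for seeds in (1, 5, 20):
        print(seeds, simulate_adoption(n_seeds=seeds)[-1])
```

The macro-level outcome (final adoption) is never programmed directly; it emerges from local threshold rules, which is what makes such models useful for comparing intervention scenarios.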

3. Policy Prediction and Complex Systems Analysis

The ultimate goal of much of computational social science is to generate empirically grounded explanations and models for informed decision-making. Policy prediction is where large-scale data analysis and simulation techniques converge.

Key Toolsets:

- Machine Learning (ML) for Prediction: Libraries such as TensorFlow, PyTorch, and Keras are used to build and train models that forecast political outcomes, consumer behavior, or population dynamics.

- Social Network Analysis (SNA): Tools such as Gephi and the NetworkX Python library map influence and information flow within social systems, which in turn informs policy prediction.

- Causal Inference Tools: While prediction focuses on 'what' will happen, causal inference (using packages like CausalPy or DoWhy) focuses on 'why' and allows researchers to estimate the effect of a specific policy intervention, crucial for responsible policy prediction.

- Visualization Tools: Tableau, Power BI, and visualization libraries in Python (Matplotlib, Seaborn, Plotly) are essential for translating complex data and simulation results into digestible insights for policymakers. A complex model is only useful if its results can be clearly communicated.
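The gap between prediction and causal inference can be shown with a small simulation. In the sketch below, a hypothetical job-training program is more likely to reach people with higher prior income, which also raises their later income, so the naive treated-versus-untreated difference overstates the program's effect. Adjusting for the confounder with ordinary least squares recovers an estimate close to the simulated true effect of 2.0. This is plain regression adjustment in NumPy, not the API of DoWhy or CausalPy, and every number is simulated.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5_000
TRUE_EFFECT = 2.0  # simulated effect of the hypothetical program

# Confounder: standardized prior income drives both enrollment and the outcome.
prior_income = rng.normal(size=n)
p_enroll = 1 / (1 + np.exp(-1.5 * prior_income))
treated = rng.random(n) < p_enroll
outcome = TRUE_EFFECT * treated + 3.0 * prior_income + rng.normal(size=n)

# Naive estimate: compare group means, ignoring the confounder.
naive = outcome[treated].mean() - outcome[~treated].mean()

# Regression adjustment: outcome ~ intercept + treated + prior_income.
X = np.column_stack([np.ones(n), treated.astype(float), prior_income])
coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
adjusted = coef[1]

print(f"naive difference:  {naive:.2f}")
print(f"adjusted estimate: {adjusted:.2f} (true effect = {TRUE_EFFECT})")
```

Regression adjustment only works here because the confounder is known and measured; dedicated causal inference libraries add tools for stating those assumptions explicitly and testing how sensitive the estimate is to them.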

Ethical Data Use: The Essential Constraint

The power of computational social science is inseparable from its ethical responsibility. The very nature of large-scale data analysis, which often involves sensitive digital traces collected without explicit consent, demands rigorous attention to ethical data use.

Core Ethical Challenges and Tools:

- Privacy and Consent: Since direct informed consent is often impossible with large, organically generated datasets, researchers must employ robust anonymization and pseudonymization techniques. They must also consider Helen Nissenbaum's concept of contextual integrity, which holds that data should only be used in ways that align with users' reasonable expectations of how their information flows, even if the data is technically "public." Regulations such as the GDPR and CCPA provide legal guardrails, but the ultimate responsibility rests with the researcher's commitment to ethical data use.

- Bias and Fairness: Algorithms and models trained on historical data risk perpetuating or even amplifying societal biases, leading to discriminatory policy prediction outcomes (e.g., in predictive policing or loan applications). CSS practitioners must use specialized tools for algorithmic fairness (e.g., AIF360, Fairlearn) to audit their data and models, and they must prioritize transparency in their methodologies to ensure accountability.

- The Impact of Prediction: Researchers must anticipate the societal impact of their work. A computational social science model designed for policy prediction could influence public opinion or government action. The ethical mandate is to engage in Responsible Research and Innovation (RRI), involving stakeholder engagement and rigorous impact assessments before deploying models. Ethical data use is not an afterthought; it is a fundamental design principle.
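Pseudonymization is often implemented with a keyed hash: the same user ID always maps to the same token, so records can still be linked within a study, but the mapping cannot be reversed or recomputed without a secret key stored separately from the data. The minimal sketch below uses Python's standard `hmac` module; the key handling, the `pseudonymize` function name, and the record fields are illustrative assumptions. Pseudonymized data can still be re-identified through quasi-identifiers such as location, timestamps, or writing style, which is why the GDPR generally still treats it as personal data.

```python
import hashlib
import hmac
import secrets


def pseudonymize(user_id: str, key: bytes) -> str:
    """Map an identifier to a stable, non-reversible token using HMAC-SHA256."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # In practice the key would live in a secrets manager, never alongside the data.
    key = secrets.token_bytes(32)
    records = [
        {"user": "@alice", "text": "Rent is up again"},
        {"user": "@bob", "text": "New bus line opened"},
        {"user": "@alice", "text": "Still looking for a flat"},
    ]
    safe = [{**r, "user": pseudonymize(r["user"], key)} for r in records]
    for row in safe:
        print(row)
    # The same person keeps the same token, so within-study linkage still works.
    print(safe[0]["user"] == safe[2]["user"] != safe[1]["user"])
```

A keyed hash is preferred over a plain SHA-256 of the ID because common handles can be hashed by anyone and matched against the published tokens; without the key, that dictionary attack is not possible.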

50 Computational Social Science Tools (Select Examples)

Rather than attempting an exhaustive list of 50 tools, the table below groups representative examples of the essential toolkit used across computational social science:

| Category | Purpose | Tool Examples |
|---|---|---|
| Programming & Core Analytics | Data cleaning, statistics, and general programming. | Python, R, Jupyter Notebook, SQL, MATLAB |
| Big Data & Distributed Computing | Handling petabytes of unstructured data. | Apache Spark, Apache Hadoop (HDFS), Apache Kafka |
| Machine Learning & AI | Predictive modeling and advanced analytics. | TensorFlow, PyTorch, scikit-learn, Keras |
| Social Simulation & ABM | Modeling emergent societal behavior. | NetLogo, Repast, Mesa (Python), Vensim (System Dynamics) |
| Data Acquisition | Collecting digital trace data. | Scrapy, Beautiful Soup, Twitter/Platform APIs, Selenium |
| Network Analysis | Mapping and analyzing relationships and influence. | NetworkX (Python), Gephi, igraph (R/Python) |
| Text Analysis & NLP | Extracting meaning, sentiment, and topics from text. | NLTK (Python), spaCy, Gensim, LIWC |
| Data Visualization | Communicating complex results. | Matplotlib, Seaborn, Tableau, Plotly, D3.js |
| Code Management & Reproducibility | Ensuring transparency and replication. | Git/GitHub, Docker, Virtual Environments |
| Causal Inference & Policy | Estimating the impact of interventions. | DoWhy, CausalPy, R packages such as fixest or plm (fixed-effects models) |

Mastering these tools enables practitioners to carry out the core mission of computational social science: moving from raw digital data to robust models that deepen our understanding of society and support policy prediction, while adhering strictly to principles of ethical data use.

Frequently Asked Questions (FAQ)

1. What is the fundamental difference between traditional social science and computational social science?

Traditional social science typically relies on small-scale primary data collection (surveys, interviews) and analytical methods suited for small, curated samples. Computational social science leverages massive, naturally occurring digital data (large-scale data analysis), advanced AI, and social simulation methods like agent-based modeling to study social phenomena at scale, focusing on complex, emergent system dynamics.

2. How does agent-based modeling contribute to policy prediction?

Agent-based modeling creates virtual societies where individual "agents" follow defined rules (micro-level behavior). By simulating millions of interactions over time, researchers can observe macro-level outcomes (e.g., the spread of a rumor or the impact of a tax policy). This allows researchers to compare policy scenarios that would be too costly, risky, or complex to test in the real world.

3. What are the main ethical concerns in computational social science?

The main ethical concerns revolve around ethical data use, specifically privacy (re-identification risk in supposedly anonymized datasets), informed consent (since digital trace data is often collected passively), and algorithmic bias (where machine learning models perpetuate societal inequalities, especially in policy prediction applications).
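A common first audit for algorithmic bias is the demographic parity difference: the gap between groups in the rate at which a model makes a positive prediction. Fairlearn provides this metric among many others; the dependency-free sketch below computes it by hand on made-up predictions so the arithmetic is visible. The function names are our own, and a large gap is a signal to investigate rather than proof of unfairness on its own; which fairness metric fits depends on the policy context.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        positives[group] += pred
        totals[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest minus smallest group selection rate (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up model outputs (1 = e.g. "flag for review") for two groups.
    preds = [1, 1, 0, 1, 0, 0, 1, 0]
    group = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, group))  # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(preds, group))  # 0.5
```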

4. Which programming languages are essential for a computational social scientist?

The two most essential programming languages are Python and R. Python is often preferred for its machine learning and large-scale data processing libraries (pandas, scikit-learn), while R is widely used for its deep statistical analysis and visualization capabilities.

5. What is the role of Natural Language Processing (NLP) in CSS?

NLP is a critical tool for large-scale data analysis in CSS, enabling researchers to process and analyze vast quantities of unstructured text data (e.g., social media, news archives). It is used for tasks like sentiment analysis, topic modeling, identifying political discourse, and tracking the evolution of cultural trends.

6. How can computational social science model the spread of misinformation?

CSS models the spread of misinformation using social simulation, often employing agent-based modeling in which agents are given different susceptibility levels and placed in different network structures. The model tracks how a piece of information diffuses through the simulated social network, identifying key "super-spreaders" and structural weak points that interventions could target, which in turn informs policy prediction.
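A minimal sketch of this kind of "super-spreader" analysis, assuming NetworkX is installed. It uses Zachary's karate club graph (bundled with NetworkX) as a stand-in social network, ranks nodes by degree and betweenness centrality, and runs a simple independent cascade model in which each newly exposed node gets one chance to pass a rumor to each neighbor with probability `p`. The `independent_cascade` and `mean_reach` functions and the value of `p` are illustrative assumptions, not part of the NetworkX API.

```python
import random

import networkx as nx


def independent_cascade(graph: nx.Graph, source, p: float, rng: random.Random) -> int:
    """Return how many nodes end up exposed when spreading starts at `source`."""
    exposed = {source}
    frontier = [source]
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbor in graph.neighbors(node):
                # Each newly exposed node gets one chance per unexposed neighbor.
                if neighbor not in exposed and rng.random() < p:
                    exposed.add(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return len(exposed)


def mean_reach(graph: nx.Graph, source, p: float = 0.15, runs: int = 1_000, seed: int = 0) -> float:
    """Average cascade size over many simulated runs from the same source."""
    rng = random.Random(seed)
    return sum(independent_cascade(graph, source, p, rng) for _ in range(runs)) / runs


G = nx.karate_club_graph()
# Structural candidates for "super-spreaders": most-connected and most-bridging nodes.
degree = nx.degree_centrality(G)
betweenness = nx.betweenness_centrality(G)
hub = max(degree, key=degree.get)
periphery = min(degree, key=degree.get)

print("top by degree:", sorted(degree, key=degree.get, reverse=True)[:3])
print("top by betweenness:", sorted(betweenness, key=betweenness.get, reverse=True)[:3])
print(f"mean reach from hub {hub}: {mean_reach(G, hub):.1f}")
print(f"mean reach from node {periphery}: {mean_reach(G, periphery):.1f}")
```

Comparing simulated reach with centrality scores is one way to check whether structurally central nodes actually behave as super-spreaders under a given spreading model.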

7. What is digital trace data in large-scale data analysis?

Digital trace data refers to the massive volumes of data generated passively as people interact with digital systems (social media likes, phone location, search history, online transactions). This data is non-reactive and voluminous, making it the primary fuel for large-scale data analysis in computational social science.

8. How does contextual integrity relate to ethical data use in CSS?

Contextual integrity is an ethical principle stating that information should be collected and disseminated according to the norms of its original context. For example, using "public" social media posts for research without considering the user's intent when posting (e.g., an expectation of context-limited audience) violates ethical data use, even if legally permissible.

9. What is the micro-macro link in social simulation?

The "micro-macro link" is the relationship explored by social simulation and agent-based modeling where local interactions between individual agents (the micro-level) give rise to complex, often unexpected, large-scale patterns and outcomes in society (the macro-level).
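The classic illustration of the micro-macro link is Schelling's segregation model. In the minimal two-dimensional sketch below, agents of two types live on a wrap-around grid, and an agent whose share of like neighbors falls below `min_similar` moves to a random empty cell; a mild preference at the micro level typically produces much stronger same-type clustering at the macro level. Grid size, vacancy rate, tolerance, and the clustering measure (mean share of like neighbors) are illustrative choices, and the `schelling` function is our own.

```python
import random


def schelling(size=20, empty_frac=0.1, min_similar=0.5, sweeps=50, seed=1):
    """Return the mean share of same-type neighbors before and after relocation."""
    rng = random.Random(seed)
    n_cells = size * size
    n_empty = int(n_cells * empty_frac)
    n_each = (n_cells - n_empty) // 2
    flat = ["A"] * n_each + ["B"] * n_each
    flat += [None] * (n_cells - len(flat))  # None marks an empty cell
    rng.shuffle(flat)
    grid = {(r, c): flat[r * size + c] for r in range(size) for c in range(size)}

    def similar_share(pos, kind):
        r, c = pos
        # Moore neighborhood (8 surrounding cells) on a torus.
        neighbors = [
            grid[((r + dr) % size, (c + dc) % size)]
            for dr in (-1, 0, 1)
            for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0)
        ]
        occupied = [k for k in neighbors if k is not None]
        if not occupied:
            return 1.0
        return sum(k == kind for k in occupied) / len(occupied)

    def mean_similarity():
        shares = [similar_share(p, k) for p, k in grid.items() if k is not None]
        return sum(shares) / len(shares)

    before = mean_similarity()
    for _ in range(sweeps):
        for pos in rng.sample(list(grid), n_cells):
            kind = grid[pos]
            if kind is None or similar_share(pos, kind) >= min_similar:
                continue  # empty cell or a satisfied agent stays put
            target = rng.choice([p for p, k in grid.items() if k is None])
            grid[target], grid[pos] = kind, None  # unhappy agent relocates
    return before, mean_similarity()


if __name__ == "__main__":
    before, after = schelling()
    print(f"mean like-neighbor share: before {before:.2f}, after {after:.2f}")
```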

10. Which tools are necessary for transparent and reproducible CSS research?

Version control with Git/GitHub and containerization tools such as Docker for managing software environments are necessary to ensure that the code, data, and models used in computational social science are transparent, auditable, and easily replicated by other researchers, upholding scientific rigor and ethical data use.
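Alongside Git and Docker, a lightweight habit is to save a provenance record next to every set of results: the interpreter and package versions, the random seed, and the current Git commit. The minimal sketch below does this with the standard library only; the package list, output file name, seed, and the `provenance` function are placeholder assumptions, and it records "not installed" or "unknown" rather than failing when a package or Git is missing.

```python
import json
import platform
import subprocess
from importlib import metadata


def package_version(name: str) -> str:
    """Installed version of a distribution, or a marker if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return "not installed"


def git_commit() -> str:
    """Current Git commit hash, or 'unknown' outside a repository or without Git."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"], capture_output=True, text=True, check=True
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return "unknown"


def provenance(packages: list[str], seed: int) -> dict:
    """Collect the facts needed to rerun an analysis under the same conditions."""
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": {name: package_version(name) for name in packages},
        "random_seed": seed,
        "git_commit": git_commit(),
    }


if __name__ == "__main__":
    record = provenance(["numpy", "pandas", "networkx"], seed=42)
    with open("provenance.json", "w", encoding="utf-8") as fh:
        json.dump(record, fh, indent=2)
    print(json.dumps(record, indent=2))
```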