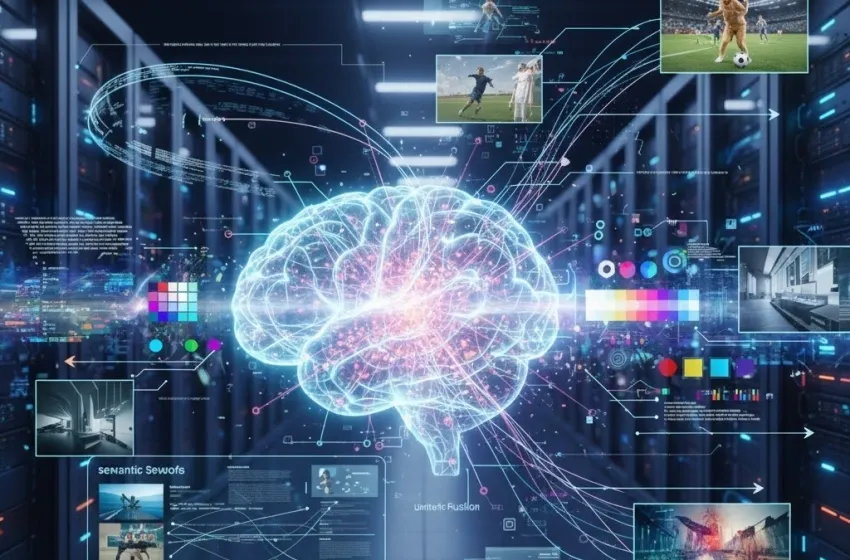

Discover Multi-modal AI and unified models like GPT-4V/Gemini. Learn how data fusion, text-to-video, and cross-domain generation unlock the future of GenAI and semantic search.

The story of Artificial Intelligence has long been one of specialization: one model for text, another for images, and a third for audio. This fragmented approach lacked the holistic understanding that defines human intelligence. Today, we are witnessing a convergence powered by Multi-modal AI (MAI). This shift moves beyond simple text generation to systems that can interpret and create content across several data types at once, changing how we interact with technology and the world around us. This is the era of unified models, and they are the future of GenAI.

The Dawn of Unified Intelligence: What is Multi-Modal AI?

Multi-modal AI is a class of artificial intelligence that can process, understand, and generate information from more than one modality (data type) at the same time. While first-generation generative AI models, such as basic Large Language Models (LLMs) and simple image generators, were restricted to single domains—text-in, text-out; or text-in, image-out—MAI models are designed to mirror the comprehensive sensory integration of the human brain.

Imagine the simple human act of watching a captioned video. Our brain simultaneously processes the visual stream (video), the auditory stream (sound/speech), and the textual stream (captions), synthesizing them into a single, cohesive narrative. Multi-modal AI achieves this same data fusion digitally.

The true breakthrough is the emergence of unified models such as GPT-4V (the vision capability added to GPT-4) and Google's Gemini family. These are presented as single systems rather than separate models stapled together: the unified design uses one large neural network, typically Transformer-based, whose shared weights encode features from all modalities into a common embedding space.

This shared embedding space is critical. It means that an image of a sunset and the textual description "a vibrant orange and purple sunset over the ocean" are mapped to nearby numerical vectors, while unrelated content lands far away. This semantic alignment allows the model to perform tasks that were out of reach for single-modality systems:

- Image-to-Text Reasoning: Describing a complex medical scan and drawing conclusions.
- Audio-to-Video Synthesis: Generating a realistic video clip in which the character's lip movements are synced to the input audio track.
- Cross-Domain Generation: Taking a design sketch (image) and generating the Python code (text) to build the corresponding user interface.

This unified approach dramatically enhances the GenAI model's accuracy, robustness, and ability to handle complex, real-world inquiries that inherently involve mixed media.
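To make the idea of a shared embedding space concrete, here is a minimal sketch of how closeness between an image and a caption could be measured with cosine similarity. The three vectors are made-up, four-dimensional stand-ins for what a real encoder would produce, and the variable names are illustrative only.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical embeddings (assumption): real models use hundreds or thousands of dimensions.
sunset_image = np.array([0.9, 0.1, 0.8, 0.2])    # stand-in for an encoded sunset photo
sunset_caption = np.array([0.8, 0.2, 0.9, 0.1])  # stand-in for the encoded caption
invoice_text = np.array([0.1, 0.9, 0.0, 0.7])    # stand-in for unrelated text

matched = cosine_similarity(sunset_image, sunset_caption)
unrelated = cosine_similarity(sunset_image, invoice_text)

print(f"image vs. matching caption: {matched:.3f}")
print(f"image vs. unrelated text:   {unrelated:.3f}")
```

In this toy setup the matching pair scores much higher than the unrelated pair, which is the property a well-aligned embedding space is meant to have.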

The Core Mechanism: Data Fusion and Cross-Domain Generation

The engine that powers multi-modal AI is data fusion, the process of integrating and harmonizing diverse data streams. Fusion techniques determine how the separate modal inputs (images, text, audio) are combined to form a richer, joint understanding.

Fusion Techniques: Bridging the Sensory Gap

Researchers generally employ three types of fusion techniques in unified models:

- Early Fusion: Combining raw data features before they are fed into the main model. For instance, concatenating image pixels and text embeddings at the input layer. This captures tight cross-modal correlations but is highly sensitive to noise and requires precise synchronization.
- Late Fusion: Merging the outputs or predictions from independently trained, modality-specific models. For example, a system might combine a prediction from an image-recognizer model and a separate prediction from a text-analyst model. This is flexible but may miss deep, nuanced interactions between the modalities.
- Intermediate/Hybrid Fusion: The most common approach in modern unified models, often leveraging cross-attention mechanisms within the Transformer architecture. Here, the features of one modality (e.g., text) are used to dynamically weight and refine the features of another modality (e.g., image). Models like Gemini are understood to rely on attention of this kind, which allows the image tokens to "look at" and be influenced by the text tokens, producing a unified model capable of deep contextual understanding.
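The three fusion styles above can be sketched in a few lines of NumPy. This is a simplified illustration, not how any particular production model is built: the feature arrays are random placeholders, the function names are hypothetical, and the cross-attention omits the learned projection matrices a real Transformer layer would have.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical pre-extracted features (assumption): 5 text tokens and 3 image patches, 8 dims each.
text_tokens = rng.normal(size=(5, 8))
image_patches = rng.normal(size=(3, 8))

def early_fusion(text: np.ndarray, image: np.ndarray) -> np.ndarray:
    """Early fusion: join both feature sequences into one input for a single joint model."""
    return np.concatenate([text, image], axis=0)

def late_fusion(text_probs: np.ndarray, image_probs: np.ndarray, text_weight: float = 0.5) -> np.ndarray:
    """Late fusion: weighted average of class probabilities from two independent models."""
    return text_weight * text_probs + (1.0 - text_weight) * image_probs

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(queries: np.ndarray, keys_values: np.ndarray):
    """Intermediate fusion: single-head scaled dot-product attention, no learned projections."""
    scale = np.sqrt(queries.shape[-1])
    scores = queries @ keys_values.T / scale      # shape: (n_queries, n_keys)
    weights = softmax(scores, axis=-1)            # each query's weights sum to 1
    return weights @ keys_values, weights

fused_input = early_fusion(text_tokens, image_patches)
combined = late_fusion(np.array([0.7, 0.3]), np.array([0.4, 0.6]))
# Image patches act as queries and "look at" the text tokens.
attended_patches, attention_weights = cross_attention(image_patches, text_tokens)

print(fused_input.shape, combined, attended_patches.shape)
```

Each attended image patch is a weighted mix of text-token features, which is the sense in which one modality refines the other.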

Cross-Domain Generation: From Text to Video and Beyond

Once the model has achieved a unified, semantic understanding of its inputs through effective data fusion, it can engage in cross-domain generation. This is the capacity to take an input from one modality and generate a coherent output in an entirely different modality.

The most exciting and computationally demanding example is text-to-video. You give the model a prompt like, "A golden retriever playing soccer in a field during a light rainstorm, filmed in the style of a 1980s VHS tape." The model must:

- Understand the text prompt (semantics).
- Recall or generate the visual content (golden retriever, soccer ball, rainstorm).
- Simulate the physics and temporal sequence (video movement).
- Apply a specific style filter (1980s VHS grain and color grading).

Handling all four steps within one system is what demonstrates the value of the Multi-modal AI framework: the model can transfer knowledge and style across disparate domains.

The New Standard: Unified Models (GPT-4V and Gemini)

The launch of models like GPT-4V and the Gemini family marked the transition of multi-modal AI from theoretical research to mainstream commercial reality. They exemplify the concept of the unified model, functioning as a single cognitive entity rather than a series of bolted-together components.

Functionality of Unified Models

These models are trained on massive datasets where all modalities are naturally aligned—images with captions, videos with transcripts, and web pages with embedded media. This allows them to perform incredibly complex tasks:

- Visual Question Answering (VQA): A user uploads an image of a complex circuit schematic and asks, "What is the function of the component labeled C3 on this schematic?" The model uses its vision encoder to parse the image, uses its language model to understand the question, and combines the two to provide a technical text answer.
- Real-Time Diagnostics: An AI model can listen to a machine's irregular running sound (audio), cross-reference it with real-time sensor data (numerical), and analyze a video feed of the machine's vibration (video) to diagnose a specific mechanical failure (text output).
- Code Generation from Sketches: Developers can hand-draw a basic website layout (image/sketch), and the GenAI model can generate the corresponding HTML, CSS, and JavaScript code (text).

This is the power of unified models: they eliminate the need for manual translation between data types, making technology more intuitive and powerful. The context, relationships, and subtle nuances that are often lost when one data type is converted to another are retained within the single, shared embedding space. Because an answer can be grounded in the image, audio, or video itself rather than in a lossy text description of it, this cross-modal reasoning can make these models more robust and can reduce, though not eliminate, "hallucination" on factual queries.
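As an illustration of how a visual question reaches such a model, the sketch below assembles a single user message that carries both a question and an image, using the content-part layout that OpenAI's Chat Completions API accepts for image inputs. The helper name `build_vqa_messages` and the placeholder image bytes are assumptions made for this example; no request is actually sent.

```python
import base64

def build_vqa_messages(image_bytes: bytes, question: str, mime_type: str = "image/png") -> list[dict]:
    """Package a question and an image into one multi-part user message (hypothetical helper)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]

# Placeholder bytes (assumption): in practice these would be read from a real image file.
placeholder_image = b"fake-image-bytes"
messages = build_vqa_messages(
    placeholder_image,
    "What is the function of the component labeled C3 on this schematic?",
)

# The list would then be passed as the `messages` argument of a chat completion request.
print(messages[0]["content"][0]["text"])
```

The point of the layout is that text and image travel in the same message, so the model receives them as one query rather than as two separate inputs.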

The Semantic Edge

Crucially, Multi-modal AI achieves its power through deep semantic understanding. Concepts such as "a red apple" and "a green pear" are defined by their meaning, not just their spelling or pixel arrangement: both are fruits, whether they appear as words, photos, or spoken phrases. By mapping all sensory inputs into the same semantic space, the model learns the core meaning of an entity regardless of its presentation format. This semantic alignment, and the cross-domain generation it enables, is the key intellectual breakthrough distinguishing unified models from earlier, less capable AI systems.

Multi-Modal AI and the Revolution in Search and Content

The impact of Multi-modal AI extends far beyond creative content generation; it is poised to redefine how we find information through semantic search and how businesses align their content with user intent.

The Semantic Search Paradigm Shift

Traditional keyword search is brittle. Searching for "best laptop for graphic design" might only return results containing those exact words. Semantic search, however, focuses on intent and meaning.

In a multi-modal world, semantic search takes on new dimensions:

- Visual Search: A user can upload a photo of a dress worn by a celebrity (image) and ask in text, "Find me five affordable stores that sell this style of dress, and summarize the customer reviews for each." The Multi-modal AI model processes the image, understands the user's commercial intent, and retrieves text and product information from various domains.
- Complex Contextual Search: A user provides an audio clip of a foreign language phrase (audio) and an image of the context (e.g., a street sign) and asks, "What does the audio mean, and where is this sign located?" The unified model uses data fusion to combine the auditory data, visual data, and semantic knowledge base to provide a complete answer.
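The contrast between keyword matching and semantic retrieval can be sketched as follows. The catalog items, their three-dimensional embeddings, and the query vector are all invented for illustration; in a real system an encoder would produce the vectors for text, images, and video alike.

```python
import numpy as np

# Hypothetical mixed-modality catalog (assumption): (modality, title, made-up embedding).
catalog = [
    ("text", "Review: lightweight notebook for illustrators", np.array([0.9, 0.8, 0.1])),
    ("image", "Photo: red evening dress", np.array([0.1, 0.2, 0.9])),
    ("video", "Clip: best laptop unboxing", np.array([0.7, 0.3, 0.2])),
]

def keyword_search(query: str, items) -> list[str]:
    """Return titles that contain every word of the query (case-insensitive)."""
    words = query.lower().split()
    return [title for _, title, _ in items if all(word in title.lower() for word in words)]

def semantic_search(query_vec: np.ndarray, items, top_k: int = 2):
    """Rank items of any modality by cosine similarity to the query embedding."""
    scored = []
    for modality, title, vec in items:
        score = float(np.dot(query_vec, vec) / (np.linalg.norm(query_vec) * np.linalg.norm(vec)))
        scored.append((modality, title, score))
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:top_k]

query = "best laptop for graphic design"
query_vec = np.array([0.9, 0.7, 0.1])  # stand-in for the encoded query (assumption)

keyword_hits = keyword_search(query, catalog)
semantic_hits = semantic_search(query_vec, catalog)

print("keyword hits:", keyword_hits)
for modality, title, score in semantic_hits:
    print(f"{modality:5s} {score:.3f} {title}")
```

In this toy data the exact-word search finds nothing, while the embedding search surfaces the illustrator notebook review and the laptop video because their vectors point in a similar direction to the query.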

This move from keywords to intent fundamentally alters SEO (Search Engine Optimization). The goal is no longer just optimizing for exact text matches but for semantic depth: creating content that comprehensively answers the user's underlying need across all relevant formats (text, charts, videos, etc.), so that a GenAI model has holistic, trustworthy material to retrieve and draw on.

Content Strategy: Building for Multi-Modality

Businesses must now optimize for Multi-modal AI retrieval systems. This means:

- Topic Clusters & Entity Authority: Building deep, interconnected content that explains all related concepts (entities and attributes) on a topic, providing the comprehensive context that multi-modal retrieval rewards.
- Diverse Content Formats: Providing text, high-quality descriptive images (with rich alt text), and summary videos. The single source must be a reservoir of multiple data types for the unified model to draw from.
- Structured Data: Using Schema markup to explicitly define the relationships between the text, image, and video content, making the data fusion process easier for the GenAI model.
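As a rough example of the structured data point, the sketch below builds schema.org JSON-LD that ties an article to its image and video. The types and property names (`Article`, `ImageObject`, `VideoObject`, `headline`, `contentUrl`, and so on) come from the schema.org vocabulary; every URL, title, and date is a placeholder invented for this example.

```python
import json

# All values below are placeholders (assumption); only the schema.org vocabulary is real.
page_markup = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "What is Multi-Modal AI?",
    "image": {
        "@type": "ImageObject",
        "contentUrl": "https://example.com/images/fusion-diagram.png",
        "caption": "Diagram of early, late, and hybrid data fusion",
    },
    "video": {
        "@type": "VideoObject",
        "name": "Multi-modal AI in two minutes",
        "description": "Short summary video for the article",
        "contentUrl": "https://example.com/videos/summary.mp4",
        "thumbnailUrl": "https://example.com/images/summary-thumb.png",
        "uploadDate": "2024-01-01",
    },
}

json_ld = json.dumps(page_markup, indent=2)
# The serialized markup is embedded in the page inside a JSON-LD script element.
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```

Nesting the image and video under the article states explicitly that all three describe the same topic, instead of leaving a crawler to infer it from page layout.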

Challenges and The Multi-Modal Future

While the promise of Multi-modal AI is transformative, several challenges remain. The cost of training and operating these unified models is substantially higher than that of their single-modal counterparts because of the sheer volume and complexity of the aligned, multi-modal data required. Furthermore, ensuring ethical alignment, avoiding bias in cross-domain generation, and maintaining computational efficiency during real-time data fusion are ongoing areas of research.

The future, however, belongs to these holistic systems. We are already seeing the early stages of text-to-video generation models producing short, high-fidelity clips, but the next step is the creation of entire, interactive virtual worlds from a simple text prompt—a seamless blend of graphics, physics, audio, and narrative.

The ultimate vision is an AI that interacts with the world not just through screens, but through embodied agents, AR/VR environments, and robotics, using its multi-modal capacity to perceive, reason, and act with human-like comprehension. From medical diagnostics that combine lab results (tabular data), scans (image), and patient notes (text) to automated creative processes that start with a mood board (images) and end with a finished advertising campaign (video), Multi-modal AI is the defining technology that will reshape how we create, search, and live in the digital age.