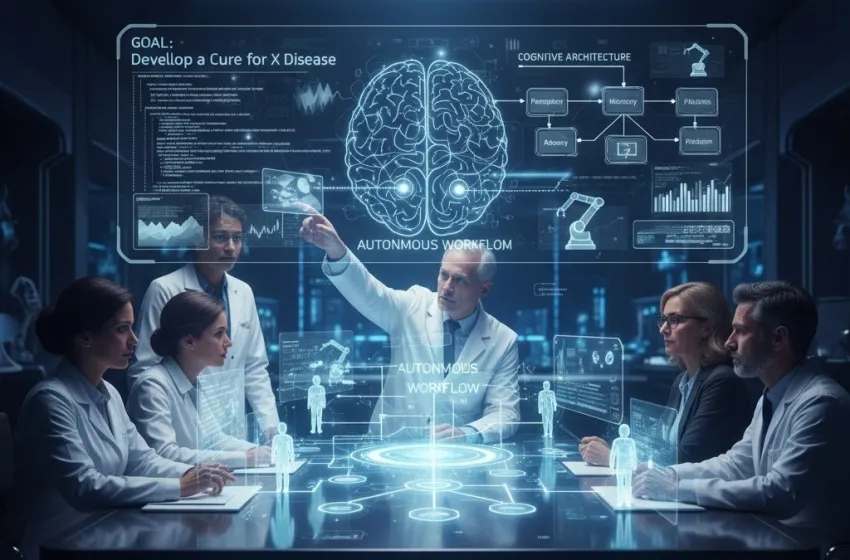

Agentic AI: autonomous LLM agents that pursue goals through multi-step tasks using a structured cognitive architecture

For the past few years, Large Language Models (LLMs) have captivated the world with their ability to generate human-like text, answer questions, and even assist with creative work. However, these models, while incredibly powerful, have primarily functioned as sophisticated reactive systems. They respond to one prompt at a time and, on their own, lack persistent memory, long-term planning, and the ability to pursue complex objectives independently. This limitation is rapidly being overcome by a new paradigm: Agentic AI.

Agentic AI represents a profound leap forward, transforming static LLMs into dynamic, autonomous agents capable of executing multi-step tasks with minimal human oversight. These agents are not just answering questions; they are planning, reasoning, acting, and learning within complex environments to achieve specific goals. This evolution promises to fundamentally reshape how we interact with technology and how work is performed, ushering in an era of truly autonomous workflows.

Understanding Agentic AI and Autonomous Agents

At its core, Agentic AI refers to AI systems designed to act independently to achieve a defined objective. These are not merely programs; they are intelligent entities equipped with a degree of autonomy, adaptability, and decision-making capability. The key components that elevate an LLM into an autonomous agent typically include:

- Memory: The ability to retain information from past interactions, observations, and generated thoughts. This can range from short-term context windows to long-term databases or vector stores, allowing the agent to build a persistent understanding of its environment and tasks.

- Planning and Reasoning: The capacity to break down complex objectives into smaller, manageable sub-tasks. This involves an internal thought process where the agent considers potential actions, evaluates their likely outcomes, and sequences them logically.

- Tool Use: The ability to leverage external tools or APIs (Application Programming Interfaces) to gather information, perform calculations, interact with software, or even control physical systems. This extends the agent's capabilities far beyond text generation.

- Action and Execution: The mechanism through which the agent interacts with its environment, whether by writing code, sending emails, generating reports, or manipulating digital interfaces.

- Observation and Self-Correction: The capacity to observe the results of its actions, compare them against its initial plan or goal, identify discrepancies, and adapt its strategy accordingly. This iterative loop is crucial for robust performance in dynamic environments.
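To make tool use and observation concrete, here is a minimal Python sketch of a tool registry. The names `TOOLS`, `tool`, and `call_tool`, along with the two toy tools, are hypothetical and not part of any particular agent framework. The design choice worth noting is that failures come back as observation strings rather than exceptions, so an agent can read the error and correct its next action.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: Dict[str, Callable[..., str]] = {}

def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register

@tool("add")
def add(a: float, b: float) -> str:
    return str(a + b)

@tool("word_count")
def word_count(text: str) -> str:
    return str(len(text.split()))

def call_tool(name: str, args_json: str) -> str:
    """Execute a tool and always return an observation string.

    Errors are returned as observations instead of being raised, so the
    agent can read them and self-correct on its next step.
    """
    if name not in TOOLS:
        return f"ERROR: unknown tool '{name}'. Available: {sorted(TOOLS)}"
    try:
        args = json.loads(args_json)      # arguments arrive as JSON text from the model
        return TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Bad JSON, wrong argument names, or non-object JSON all end up here.
        return f"ERROR: {type(exc).__name__}: {exc}"
```

For example, `call_tool("add", '{"a": 2, "b": 3}')` returns `"5"`, while `call_tool("add", '{"x": 1}')` returns a `TypeError` message the agent can use to fix its arguments.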

The Role of LLM Agents

LLM agents are the most prominent form of Agentic AI currently being developed. Here, the large language model serves as the agent's "brain", the central component of its cognitive architecture, providing the core reasoning, planning, and natural language understanding capabilities. The LLM can interpret instructions, generate plans in natural language, decide which tools to use, and even reflect on its own performance.

These LLM agents can be designed with various levels of sophistication. Simple agents might follow a pre-defined sequence of operations, while more advanced agents choose their next step dynamically and can refine their strategies over time by drawing on feedback stored from earlier interactions. Prompting the LLM to articulate its reasoning step by step before acting, known as "inner monologue" or "chain-of-thought" prompting, is a key technique here: it tends to improve performance on multi-step problems and makes the agent's decisions easier to inspect.
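Many agent implementations ask the LLM to emit its reasoning and its chosen action in a fixed text format, a pattern often associated with ReAct-style prompting. The sketch below assumes one such format (`Thought: ...`, then either `Action: tool[input]` or `Final Answer: ...`). The format, the `Step` class, and `parse_step` are illustrative assumptions, and real frameworks differ; many now use structured JSON tool calls instead.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Step:
    thought: str
    action: Optional[str] = None        # tool name, if the model chose to act
    action_input: Optional[str] = None  # argument passed to that tool
    final_answer: Optional[str] = None  # set when the model is done

# Assumed output format (ReAct-style), for example:
#   Thought: I should look this up.
#   Action: search[some query]
THOUGHT_RE = re.compile(r"Thought:\s*(.*)")            # reasoning, up to end of line
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")     # tool_name[input]
FINAL_RE = re.compile(r"Final Answer:\s*(.*)", re.DOTALL)

def parse_step(text: str) -> Step:
    """Split one LLM completion into its reasoning and its chosen action."""
    thought_match = THOUGHT_RE.search(text)
    thought = thought_match.group(1).strip() if thought_match else ""
    final = FINAL_RE.search(text)
    if final:
        return Step(thought=thought, final_answer=final.group(1).strip())
    action = ACTION_RE.search(text)
    if action:
        return Step(thought=thought, action=action.group(1), action_input=action.group(2))
    # Malformed output: a real agent would typically re-prompt the model here.
    raise ValueError("Completion contains neither an Action nor a Final Answer")
```

Keeping the thought separate from the action is what makes the reasoning inspectable. The thought can be logged for debugging while only the action is executed.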

Cognitive Architecture: The Blueprint of Autonomy

The success of Agentic AI hinges on a well-designed cognitive architecture. This refers to the organizational structure of an intelligent system, outlining how its various components (memory, perception, reasoning, action) interact to produce intelligent behavior. For LLM agents, a typical cognitive architecture might involve:

- Perception Module: Gathers information from the environment (e.g., user input, data from web searches, outputs from other tools).

- Working Memory: A short-term buffer for current task context, recent observations, and intermediate thoughts.

- Long-Term Memory: Stores accumulated knowledge, past experiences, learned patterns, and potentially "skill sets" in a retrievable format (e.g., using vector databases for semantic search).

- Planner/Reasoner Module: Powered by the LLM, this module takes the current goal and information from memory, then generates a step-by-step plan, selects appropriate tools, and anticipates outcomes.

- Action Module: Translates the planned actions into concrete commands or outputs, interacting with the real or digital world.

- Reflection/Learning Module: Analyzes the outcome of actions, identifies errors or inefficiencies, updates long-term memory, and refines future planning strategies. This iterative self-improvement is key to the agent's adaptability.
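A minimal sketch of the two memory modules follows, assuming Python 3. `embed` is a deliberately crude stand-in for a real embedding model that simply counts words. `LongTermMemory` does a linear scan where a production system would use a vector database. Both class names are hypothetical.

```python
import math
from collections import Counter, deque
from typing import List, Tuple

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class WorkingMemory:
    """Short-term buffer: keeps only the most recent items."""
    def __init__(self, capacity: int = 5):
        self.items = deque(maxlen=capacity)

    def add(self, item: str) -> None:
        self.items.append(item)  # the oldest item is evicted automatically when full

    def context(self) -> List[str]:
        return list(self.items)

class LongTermMemory:
    """Persistent store searched by similarity, like a tiny vector database."""
    def __init__(self):
        self.records: List[Tuple[str, Counter]] = []

    def store(self, text: str) -> None:
        self.records.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

The split mirrors the architecture above. Working memory is small and always included in the prompt. Long-term memory can grow without bound but is only consulted through retrieval.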

This cognitive architecture allows LLM agents to tackle multi-step tasks that a simple prompt-response system cannot handle. Instead of generating a single output, they run an iterative loop of thought, action, and observation, much like a human problem-solver.
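The thought-action-observation loop can be sketched as follows. `run_agent`, `Decision`, and `scripted_policy` are hypothetical. The policy stands in for an LLM call so the example runs without a model. The `max_steps` budget is an assumed safeguard against an agent that never finishes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class Decision:
    thought: str
    tool: Optional[str] = None  # None means the agent is finished
    arg: str = ""
    answer: str = ""

# A policy sees the goal plus all (action, observation) pairs so far.
Policy = Callable[[str, List[Tuple[str, str]]], Decision]

def run_agent(goal: str, policy: Policy,
              tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Repeat think -> act -> observe until the policy answers or the budget runs out."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        decision = policy(goal, history)                 # think (an LLM call in a real agent)
        if decision.tool is None:
            return decision.answer
        fn = tools.get(decision.tool)                    # act
        observation = fn(decision.arg) if fn else f"ERROR: unknown tool {decision.tool}"
        history.append((f"{decision.tool}({decision.arg})", observation))  # observe
    return "stopped: step budget exhausted"

# Hypothetical scripted policy: look something up once, then answer.
def scripted_policy(goal: str, history: List[Tuple[str, str]]) -> Decision:
    if not history:
        return Decision("I need the order total first.", tool="lookup", arg="order-17")
    return Decision("I have what I need.", answer=f"Total is {history[-1][1]}")

tools = {"lookup": lambda order_id: {"order-17": "$40"}.get(order_id, "not found")}
print(run_agent("Report the total for order-17", scripted_policy, tools))  # Total is $40
```

Because every observation is appended to `history` and passed back to the policy, each new decision can take earlier results, including errors, into account.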

Autonomous Workflows: The Practical Impact

The rise of Agentic AI is poised to transform autonomous workflows across virtually every industry. An autonomous workflow is a sequence of tasks that can be executed end-to-end by AI agents with minimal or no human intervention.

Examples of Autonomous Workflows in Action:

- Automated Software Development: An LLM agent could be given a high-level request like "Develop a web application that manages customer orders." The agent would then:
  - Plan the architecture (frontend, backend, database).
  - Research necessary technologies and frameworks.
  - Write the code for different components (using a code interpreter or interacting with IDEs).
  - Test the code, identify bugs, and self-correct.
  - Deploy the application. This represents a significant leap from current code-generating LLMs.

- Dynamic Market Research and Analysis: An autonomous agent could be tasked with "Identify emerging trends in sustainable fashion for Q3 2024 and prepare a summary report." The agent would:
  - Browse relevant news articles, academic papers, and social media trends (using web search tools).
  - Analyze market data and consumer sentiment (using data analysis tools).
  - Synthesize findings, identify key patterns, and generate hypotheses.
  - Draft a comprehensive report, including graphs and data visualizations.
  - Iteratively refine the report based on internal criteria or simulated feedback.

- Personalized Customer Service and Support: Instead of rigid chatbots, LLM agents could act as highly adaptable customer service representatives. They could:
  - Understand complex, multi-part customer queries.
  - Access internal knowledge bases and customer history.
  - Interact with backend systems to process orders, troubleshoot issues, or update accounts.
  - Escalate to human agents only for truly unique or sensitive situations.
  - Learn from each interaction to improve future responses.

- Scientific Discovery and Experimentation: Agentic AI can accelerate scientific research by:
  - Reviewing vast amounts of existing literature to form hypotheses.
  - Designing experiments and simulating outcomes.
  - Controlling laboratory equipment (e.g., robotic arms, automated microscopes).
  - Analyzing experimental data and drawing conclusions.
  - Iterating on experimental design based on results. This offers immense potential in drug discovery, material science, and personalized medicine.
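Task decomposition in the software-development example can be represented as a dependency graph. This sketch assumes Python 3.9+ for the standard-library `graphlib` module. The plan itself (`PLAN`) is a hypothetical example of what an agent might generate, not output from a real system. `execution_batches` groups sub-tasks whose prerequisites are complete, so independent steps such as frontend and backend work could run in parallel.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical plan for "Develop a web application that manages customer orders".
# Each sub-task maps to the set of sub-tasks that must finish before it.
PLAN: Dict[str, Set[str]] = {
    "plan_architecture": set(),
    "research_frameworks": {"plan_architecture"},
    "write_backend": {"research_frameworks"},
    "write_frontend": {"research_frameworks"},
    "run_tests": {"write_backend", "write_frontend"},
    "deploy": {"run_tests"},
}

def execution_batches(plan: Dict[str, Set[str]]) -> List[List[str]]:
    """Group sub-tasks into batches; tasks in the same batch do not depend
    on each other and could be executed in parallel."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()                  # raises graphlib.CycleError if the plan is circular
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)           # mark the batch complete to unlock dependents
    return batches

for i, batch in enumerate(execution_batches(PLAN), start=1):
    print(i, batch)
```

Running it prints five batches, with `write_backend` and `write_frontend` together in the third. A circular plan is rejected up front by `prepare()`, which is one cheap way to catch a malformed decomposition before any work starts.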

Key Concepts and Related Terms

The development and deployment of Agentic AI rely on several interconnected concepts and technologies:

- Reinforcement Learning from Human Feedback (RLHF): A training technique, applied to the underlying model, that is widely used to align its behavior with human values and intentions.

- Generative AI: The broader class of models, including the LLMs at the heart of agents, that generate new content.

- AI Orchestration: Managing and coordinating multiple agents or components within a complex workflow.

- Task Decomposition: The ability to break down a high-level goal into actionable sub-tasks.

- Tool Learning/Augmentation: Agents learning to use new tools or improving their efficiency with existing ones.

- Autonomous Systems: A broader term encompassing any self-governing system, with Agentic AI being a specific type.

- Intelligent Automation: The use of AI to automate complex processes, evolving beyond Robotic Process Automation (RPA).

- Decision-Making AI: AI systems explicitly designed to make choices and evaluate consequences.

- AI Planning: The field focused on developing AI agents that can generate plans of action.

- Self-Improving AI: Agents that learn and get better over time without constant reprogramming.
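AI orchestration can be illustrated with a simple router that sends each sub-task to an agent advertising the matching skill. `WorkerAgent` and `Orchestrator` are hypothetical names, and the lambda handlers stand in for LLM-backed agents. Real orchestration frameworks add scheduling, retries, and shared memory on top of this basic idea.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple

@dataclass
class WorkerAgent:
    name: str
    skills: Set[str]
    handle: Callable[[str], str]  # stand-in for an LLM-backed agent

class Orchestrator:
    """Routes each (skill, task) pair to the first registered agent with that skill."""
    def __init__(self, workers: List[WorkerAgent]):
        self.workers = workers

    def route(self, skill: str) -> WorkerAgent:
        for worker in self.workers:
            if skill in worker.skills:
                return worker
        raise LookupError(f"no agent can handle skill '{skill}'")

    def run(self, subtasks: List[Tuple[str, str]]) -> Dict[str, str]:
        """Execute sub-tasks in order and collect each agent's result."""
        results: Dict[str, str] = {}
        for skill, task in subtasks:
            worker = self.route(skill)
            results[task] = f"{worker.name}: {worker.handle(task)}"
        return results

researcher = WorkerAgent("researcher", {"search"}, lambda t: f"found sources on {t}")
writer = WorkerAgent("writer", {"write"}, lambda t: f"drafted {t}")
orchestrator = Orchestrator([researcher, writer])
results = orchestrator.run([("search", "sustainable fashion"), ("write", "summary report")])
```

Here `results["summary report"]` is `"writer: drafted summary report"`. Routing by declared skill keeps each worker narrow, which is the usual motivation for splitting a workflow across several agents.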

Challenges and Future Outlook

While the potential of Agentic AI and autonomous workflows is immense, several challenges need to be addressed:

- Reliability and Robustness: Agents can still hallucinate, get stuck in loops, or make illogical decisions. Ensuring their reliability, especially in critical applications, is paramount.

- Safety and Control: How do we ensure these autonomous systems act ethically, safely, and within predefined boundaries? Implementing robust guardrails and human oversight mechanisms is crucial.

- Interpretability and Explainability: Understanding why an agent made a particular decision, especially when complex reasoning is involved, is essential for trust and debugging.

- Resource Intensity: Running complex LLM agents that engage in multiple rounds of reasoning and tool use can be computationally expensive.

- Economic and Societal Impact: The widespread adoption of autonomous workflows is likely to cause significant shifts in the job market, requiring new models of education, training, and economic support.
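Several of these challenges are commonly addressed with runtime guardrails. The sketch below combines four assumed checks in one hypothetical `Guardrails` class:

- a tool allowlist, for safety;
- a human-approval hook for sensitive tools, for control and oversight;
- a step budget, for resource intensity;
- detection of repeated identical actions, for agents stuck in loops.

The thresholds are arbitrary placeholders, not recommended values.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Set

@dataclass
class Guardrails:
    allowed_tools: Set[str]
    sensitive_tools: Set[str]   # these require human sign-off
    max_steps: int = 10         # caps total actions, and therefore cost
    max_repeats: int = 2        # identical calls allowed before declaring a loop
    # Human-in-the-loop hook; the default denies every sensitive action.
    approve: Callable[[str, str], bool] = lambda tool, arg: False
    steps: int = field(default=0, init=False)
    seen: Counter = field(default_factory=Counter, init=False)

    def check(self, tool: str, arg: str) -> str:
        """Return 'allow', or a reason why the proposed action is blocked."""
        self.steps += 1
        if self.steps > self.max_steps:
            return "blocked: step budget exceeded"
        if tool not in self.allowed_tools:
            return f"blocked: '{tool}' is not on the allowlist"
        self.seen[(tool, arg)] += 1
        if self.seen[(tool, arg)] > self.max_repeats:
            return "blocked: repeated identical action (possible loop)"
        if tool in self.sensitive_tools and not self.approve(tool, arg):
            return "blocked: human approval denied"
        return "allow"
```

An agent loop would call `check` before executing each action and feed any "blocked" message back to the model as an observation. The checks run outside the LLM, so they hold even when the model's reasoning goes wrong.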

Despite these hurdles, the trajectory of Agentic AI is clear. Research is rapidly advancing in areas like meta-learning, where agents learn how to learn; multi-agent systems, where groups of agents collaborate to achieve larger goals; and embodied AI, where agents interact with the physical world.

The future of work will not just involve humans interacting with AI tools, but humans collaborating with highly capable autonomous agents. These agents will not merely augment human capabilities; they will take on entire workflows, freeing up human creativity, strategic thinking, and emotional intelligence for tasks that truly require them. The era of truly goal-directed AI and dynamic autonomous workflows is no longer a distant dream but an unfolding reality, promising unprecedented levels of productivity and innovation.